Use Cases

Forward customer data to Snowflake for analysis and storage

Watch an end-to-end walkthrough of this tutorial here.

Using a CDP to handle cross-platform data collection, data governance, and data quality management, then forwarding these events to a Data Warehouse for analysis and long term storage is an effective strategy for leveraging the unique capabilities that both of these tools offer. With your customer data stored in a Data Warehouse like Snowflake, you can easily query historic data to perform longitudinal analysis on your customers, and combine your customer data with information from many other sources.

In this tutorial, you will learn how to:

- Collect eCommerce customer data with mParticle

- Forward this data from mParticle to Snowflake

- Query this data in Snowflake to retrieve targeted customer segments

Step 1: Integrate mParticle’s SDK into your eCommerce client

The first step is to implement event collection code throughout your eCommerce app using mParticle’s SDKs. When doing this, we recommend starting with a Data Plan. For best practices on how to create and maintain a data plan using mParticle's data planning tools, visit this blog post.

Step 2: Set up your warehouse, database, and schema in Snowflake

Next, you will need to instantiate the warehouse, database, and schema within Snowflake to which you will eventually forward your eCommerce data. You can do this by running the SQL below within a Snowflake Worksheet:

-- Create a warehouse and choose the appropriate size. We use AUTO_SUSPEND of 10 minutes (600 seconds) as an example. Please adjust accordingly if needed.

CREATE WAREHOUSE mPeCommerceWarehouse WITH WAREHOUSE_SIZE = 'XSMALL' AUTO_SUSPEND = 600 AUTO_RESUME = TRUE;

-- Create database

CREATE DATABASE mPeCommerceDatabase;

-- Create schema

CREATE SCHEMA mPeCommerceSchema WITH managed access;Step 3: Create roles and manage user permissions in Snowflake

Now you’ll need to create a role with permissions to manage the database you just created within Snowflake, again by running direct SQL commands:

-- Create new role:

CREATE ROLE data_loader;

-- Grant access to your warehouse, database and schema

GRANT USAGE ON WAREHOUSE mPeCommerceWarehouse TO ROLE data_loader;

GRANT USAGE ON DATABASE mPeCommerceDatabase TO ROLE data_loader;

GRANT ALL ON SCHEMA mPeCommerceSchema TO ROLE data_loader;

-- Create user with your new role

CREATE USER mparticle_user

MUST_CHANGE_PASSWORD = FALSE

DEFAULT_ROLE = data_loader

PASSWORD = "STRONG_PASSWORD_HERE";

GRANT ROLE data_loader TO USER mparticle_user;Step 4: Enable the Snowflake integration within mParticle

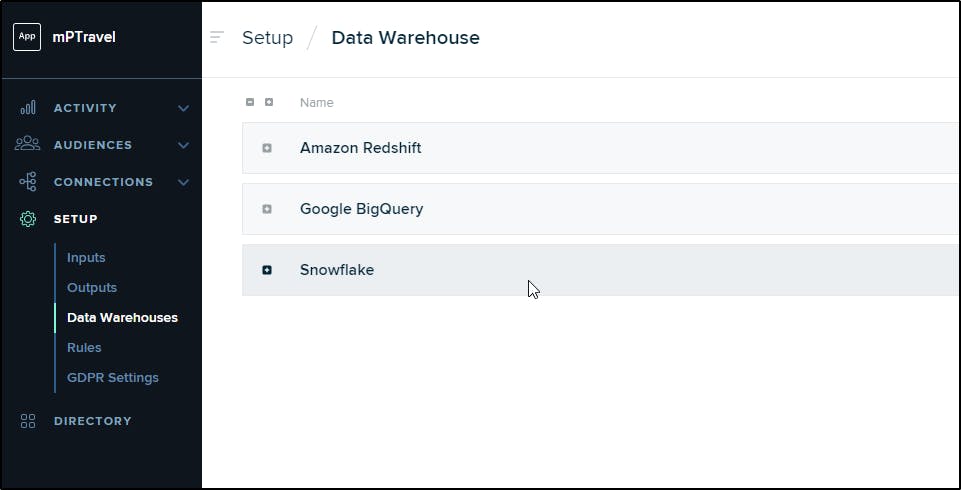

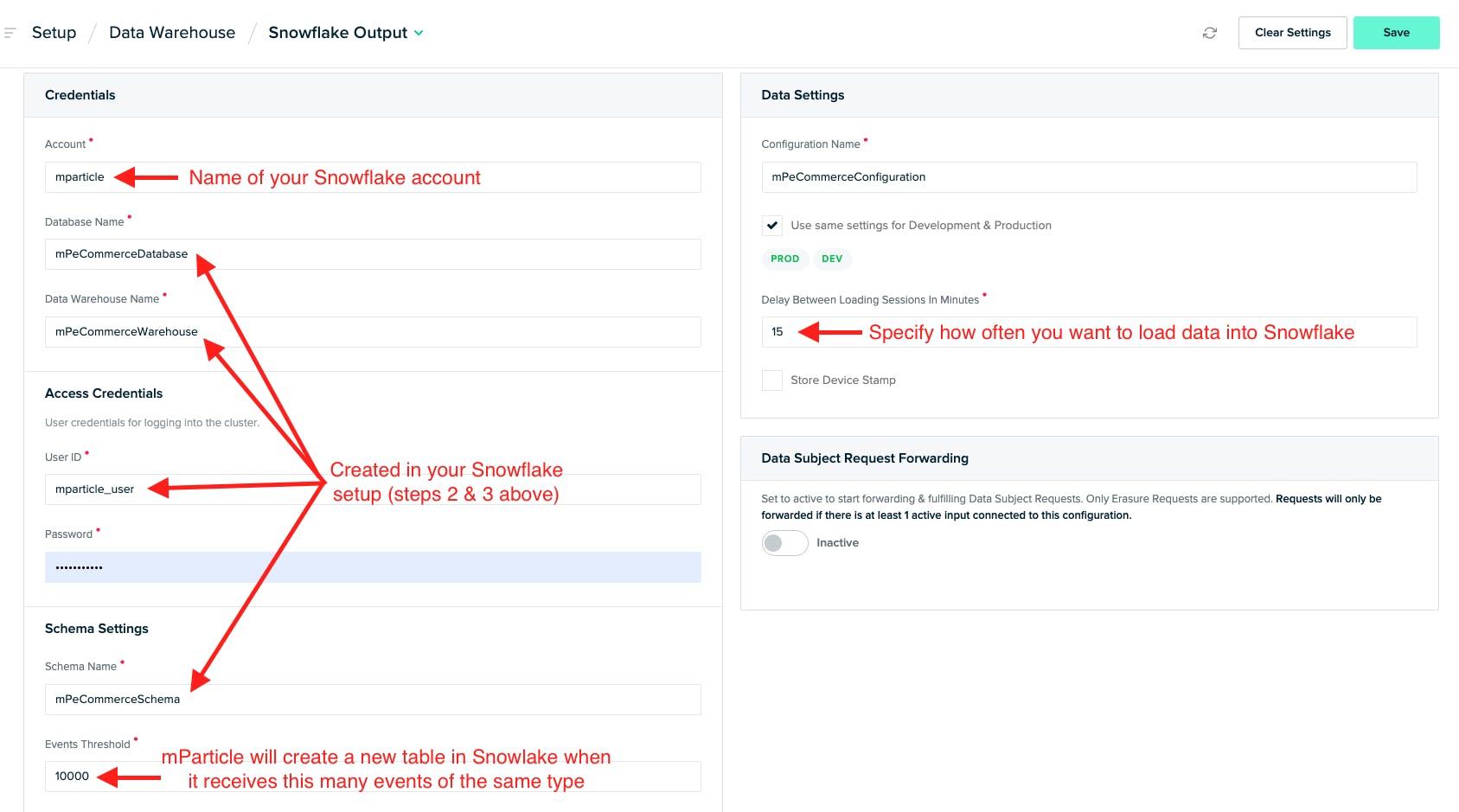

In your mParticle workspace, select “Data Warehouses” under “Setup” in the navigation menu. Select Snowflake, then click on the plus icon to name and add a new Snowflake configuration.

With the “Settings” tab of your Snowflake configuration, enter the database, warehouse, and schema names you created in Snowflake, along with the user ID and password you set for your dedicated user. Here you’ll also supply an event threshold, which specifies the number of events of the same type that mParticle must receive before it creating a dedicated table for that event in Snowflake, and specify a loading delay which tells mParticle how frequently to forward event batches to Snowflake.

Navigate to Connections / Connect in the mParticle UI to connect individual inputs to your Snowflake output. In this example, you should select the “Web” input since you be forwarding events from the eCommerce app you connected to mParticle in step 1 above.

Step 5: Verify your data connection in Snowflake

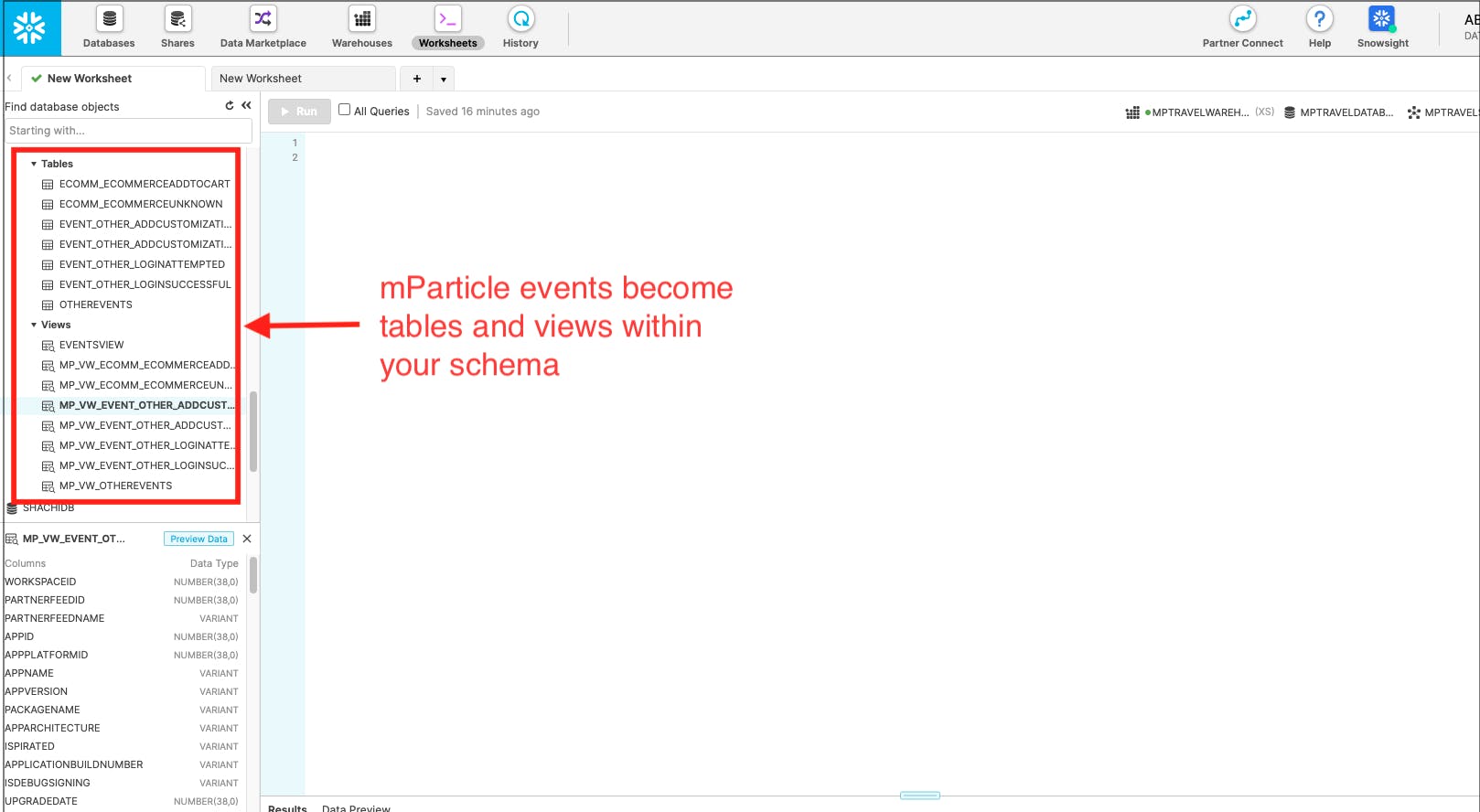

Once Snowflake has received its first event batch from mParticle, you will see this data represented as tables and views within your Snowflake schema.

Step 6: Query your data to retrieve targeted audiences

Now that your eCommerce data is being forwarded to Snowflake, we can write SQL queries directly within our Snowflake Worksheet to retrieve targeted customer segments. In this example, let’s assume that our product team has an educated hypothesis that customers who select red couches have a higher than average likelihood to make repeat purchases. Since we are logging an “Add Customization–Color” event in mParticle and forwarding these events to Snowflake, we can easily pull this audience with a simple SQL query.

SELECT EMAIL, MPARTICLEUSERID, EVENTTIMESTAMP, EVENTATTRIBUTES

FROM “MPECOMMERCEDATABASE”.”MPECOMMERCESCHEMA”.”MP_VW_EVENT_OTHER_ADDCUSTOMIZATIONCOLOR”

WHERE EVENTATTRIBUTES[‘color] = ‘red’Excellent! Since we were capturing email as a user attribute along with these events, we can now export this targeted audience as a CSV that will allow our marketing team to contact these customers with targeted offers. Additionally, since we more than likely have customer email identifiers in data sets from other sources, we have the option of running JOIN statements to combine this data set with information from other sources stored across our Data Warehouse.