Prevent data quality issues with these six habits of highly effective data

Maintaining data quality across an organization can feel like a daunting task, especially when your data comes from a myriad of devices and sources. While there is no one magic solution, adopting these six habits will put your organization on the path to consistently reaping the benefits of high-quality data.

In order for data-driven teams to make effective and timely decisions, they need to trust that the information at their disposal is accurate, complete, and up to date. Since modern companies ingest data from a great number and variety of sources, avoiding data quality issues can be a significant challenge. Following these six habits can help you consistently supply growth teams with the high-quality, reliable information they need, and avoid the pitfalls of messy, incomplete, and misleading data.

Habit 1: Collect your own data

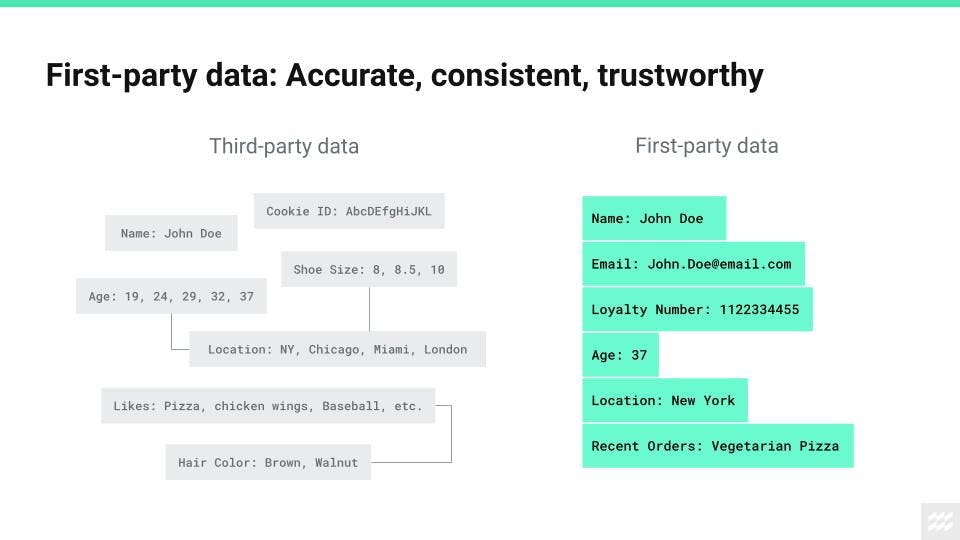

An old adage says that if you want something done right, you should do it yourself. If you want your data to be accurate and reliable, you should collect it yourself, from sources that you own. Information gathered directly from your company’s owned digital touchpoints is called first-party data. This should not be confused with third-party data, which is information acquired from a variety of external sources and used to power your marketing, advertising, and business intelligence initiatives.

Since third-party data is not restricted to the individuals currently engaging with a company’s apps, websites, and other properties, these datasets are often more extensive than those collected from owned channels alone. Additionally, third-party data can often be easier for companies to acquire, since it does not require the strategic and technical investment that implementing a first-party data plan requires.

Convenience is where the advantages of third-party data end, however. When it comes to quality and reliability, third-party datasets often contain conflicting, out-of-date, and generally hazy records. By contrast, first-party data is much more likely to be up to date, consistent, and transparent.

With greater trust in the quality of your data, you can be much more confident that the personalized experiences you create for customers will be meaningful and relevant to them.

Habit 2: Focus on the “why”

You likely have many channels on which customers interact with your brand. The more complex customer journeys become, the more opportunities there are to collect data on your users’ actions and behaviors. While it can be tempting to slide into a “more data is always better” mindset, it is important to resist putting the cart before the horse when it comes to your data strategy.

Use cases and business goals should always inform the data points you collect, not the other way around. When cross-functional data stakeholders come together to develop a data plan, they should define the business outcomes they want to realize with data. Once these scenarios have been clearly articulated, the team can then move to identifying the data points that will aid in achieving these outcomes.

When you anchor on the “why” behind the data, you’re much more likely to end up with clean, lean, and actionable data, and not an expansive sea of information that ultimately results in analysis paralysis.

Habit 3: Establish consistent naming conventions

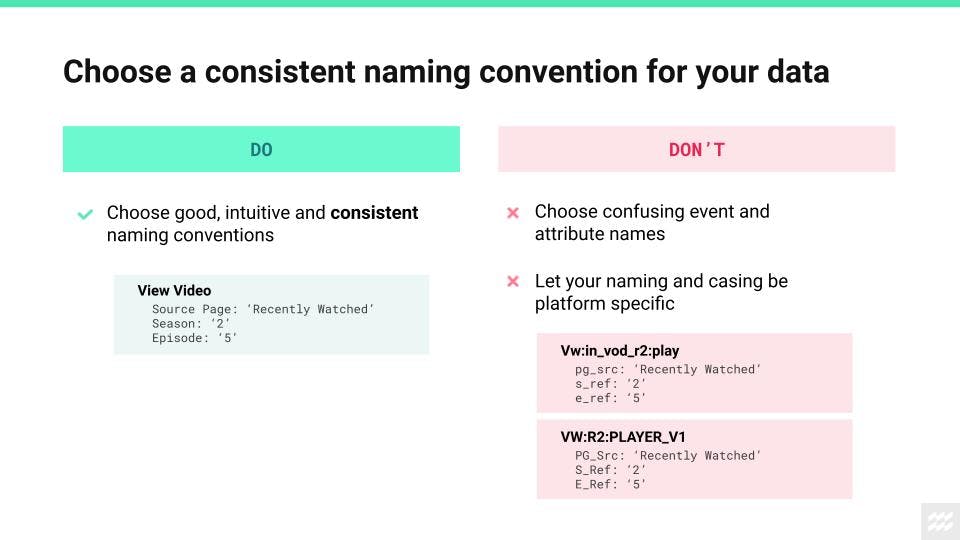

To maintain a cohesive picture of your customers as they interact with your brand across channels, you need to make sure that the data you collect is consistent across every one of them.

A critical aspect of establishing consistency across your incoming data is to create universal naming conventions for data events and attributes. When deciding how to name your data, use syntax that is valid in every programming language your engineering teams use. Names should be intuitive and human-readable, so that someone unfamiliar with the data plan can understand at a glance what data is being collected. When data is self-descriptive, it is less error-prone and easier to work with once it has been ingested.
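Naming conventions are easiest to keep when they are checked automatically. The sketch below assumes a hypothetical convention chosen to match the examples in this post: event names are Title Case words separated by single spaces ("View Product"), and attribute names are lowercase snake_case ("price", "product_category"). The regular expressions and the `naming_violations` helper are illustrative, not a standard; swap in whatever conventions your data plan specifies.

```python
import re

# Hypothetical conventions, chosen to match the examples in this post:
# event names are Title Case words separated by single spaces ("View Product"),
# attribute names are lowercase snake_case ("price", "product_category").
# snake_case names are also valid identifiers in most mainstream languages.
EVENT_NAME = re.compile(r"[A-Z][a-z0-9]*( [A-Z][a-z0-9]*)*")
ATTRIBUTE_NAME = re.compile(r"[a-z][a-z0-9]*(_[a-z0-9]+)*")


def naming_violations(event_names, attribute_names):
    """Return the event and attribute names that break the conventions above."""
    return {
        "events": [n for n in event_names if not EVENT_NAME.fullmatch(n)],
        "attributes": [n for n in attribute_names if not ATTRIBUTE_NAME.fullmatch(n)],
    }


report = naming_violations(
    ["View Product", "Add To Cart", "view_product", "ViewProduct"],
    ["price", "sku", "product_category", "Color", "price-usd"],
)
print(report)
# {'events': ['view_product', 'ViewProduct'], 'attributes': ['Color', 'price-usd']}
```

A check like this could run whenever new events are proposed, so that names which drift from the convention are caught before any data is collected under them.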

Habit 4: Create a data plan, and use it as your source of truth

Developing and executing an effective data strategy is a team sport. Given the complexity of collecting and activating data in a modern organization, however, it is extremely unlikely that multiple reference documents will remain up to date with all of the pertinent details of the data strategy. That’s why it is advisable to create and maintain one data plan as your source of truth that keeps teams aligned on this strategy.

This data plan should be a single document (usually a spreadsheet) that serves as an organization-wide reference for all information pertaining to data collection and implementation. Key details that the data plan should include are the names of the data events, attributes, and user identities that will be collected, as well as the conventions for naming these data points. While this plan can and should evolve with the needs of data-driven teams, it is critical that it exists as a single record accessible to every stakeholder who needs it, rather than as fragmented documents stored in silos across the organization.
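Because the data plan is usually a spreadsheet, one way to make it usable by code as well as by people is to export it to CSV and load it into a lookup structure. The sketch below assumes a hypothetical layout with the columns `event_name`, `attribute_name`, `attribute_type`, and `required`; the rows and the `load_data_plan` helper are illustrative only.

```python
import csv
import io
from collections import defaultdict

# Hypothetical CSV export of a data plan spreadsheet. The column names and
# rows are illustrative assumptions, not a prescribed format.
DATA_PLAN_CSV = """event_name,attribute_name,attribute_type,required
View Product,sku,string,yes
View Product,price,number,yes
View Product,category,string,no
View Product,color,string,no
Add To Cart,sku,string,yes
Add To Cart,quantity,number,yes
"""


def load_data_plan(csv_text):
    """Group attribute definitions by event: {event: {attribute: (type, required)}}."""
    plan = defaultdict(dict)
    for row in csv.DictReader(io.StringIO(csv_text)):
        plan[row["event_name"]][row["attribute_name"]] = (
            row["attribute_type"],
            row["required"].strip().lower() == "yes",
        )
    return dict(plan)


plan = load_data_plan(DATA_PLAN_CSV)
print(sorted(plan))                  # ['Add To Cart', 'View Product']
print(plan["View Product"]["price"])  # ('number', True)
```

Loading the plan from the same file that stakeholders edit keeps it the single source of truth: validation and tooling read the plan rather than a separate, hand-maintained copy.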

This blog post provides more detail on best practices for establishing a cross-functional data planning team. Additionally, if you are interested in kicking off a data planning practice at your organization but unsure of where to begin, this post will help.

Habit 5: Define generic events, and specific attributes

Remember when we said that we should always let specific use cases define the data we want to collect? In addition to thinking about business use cases when modeling your data, you should also bear in mind how that data will eventually be analyzed and queried. What are the things your teams will want to see on a regular basis? What types of audiences will you be creating? What are the customer behaviors that you want to understand?

Most of the data you collect will describe actions that your customers perform as they interact with your digital touchpoints. Often, customers will perform the same type of action several times as they move through your websites and apps. For the purposes of analyzing these events, it is best to use a generic label to describe the event itself, then use event attributes to capture the specific details of the action. Using one generic name for all events of a given type, rather than granular labels for each individual variation, makes this data more meaningful and much easier to understand and analyze.

In the case of an eCommerce site, for example, a customer may view dozens of product pages per session. Rather than creating a new event for each individual product page (for example, “View Red Sweater” and “View Green Sweater”), create a generic “View Product” event, then attach information describing the specific product, such as “category,” “color,” “price,” and “SKU,” to this event as attributes.
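A minimal sketch of this modeling choice, assuming a hypothetical `Event` record with a name, a customer ID, and a dictionary of attributes; the `view_product` helper, customer IDs, and SKUs are made up for illustration. Every product page produces the same event name, and the product-specific details travel in the attributes.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    """A hypothetical minimal event record: generic name plus specific attributes."""
    name: str
    customer_id: str
    attributes: dict = field(default_factory=dict)


def view_product(customer_id, sku, category, color, price):
    # One generic event type for every product page; the product-specific
    # details live in attributes instead of in the event name.
    return Event(
        name="View Product",
        customer_id=customer_id,
        attributes={"sku": sku, "category": category, "color": color, "price": price},
    )


red = view_product("c1", "SW-RED-M", "sweaters", "red", 45.0)
green = view_product("c2", "SW-GRN-M", "sweaters", "green", 60.0)

# Both records share the event name "View Product"; only the attributes differ.
print(red.name == green.name)  # True
```

The granular alternative would be something like `Event(name="View Red Sweater", ...)`, which multiplies the number of event names with every new product.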

This way, when your “View Product” events start populating, it will be much easier to work with this data. If you want to know how many customers viewed products priced over $50, for example, you can simply filter the “View Product” event for records where the “price” attribute is greater than 50. With more granular event names, you would have to perform this filter operation on “View Red Sweater,” “View Green Sweater,” and every other individual product-view event, then combine the results.
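Here is the query from the paragraph above as a small sketch over a hypothetical in-memory sample of events; in practice the events would live in a warehouse or analytics tool, and the field names (`event`, `customer_id`, `attributes`) are assumptions. Because all product views share one event name, a single filter on the `price` attribute answers the question. Counting distinct customer IDs matters here: one customer may view several qualifying products.

```python
# Hypothetical sample of ingested events; in practice these would come
# from your warehouse or analytics tool.
events = [
    {"event": "View Product", "customer_id": "c1", "attributes": {"sku": "SW-RED-M", "price": 45.0}},
    {"event": "View Product", "customer_id": "c2", "attributes": {"sku": "SW-GRN-M", "price": 60.0}},
    {"event": "View Product", "customer_id": "c2", "attributes": {"sku": "JK-BLK-L", "price": 120.0}},
    {"event": "View Product", "customer_id": "c3", "attributes": {"sku": "SC-BLU", "price": 55.0}},
    {"event": "Add To Cart", "customer_id": "c1", "attributes": {"sku": "SW-RED-M", "price": 45.0}},
]


def customers_viewing_over(events, min_price):
    """Distinct customers with at least one View Product event priced above min_price."""
    return {
        e["customer_id"]
        for e in events
        if e["event"] == "View Product" and e["attributes"].get("price", 0) > min_price
    }


print(len(customers_viewing_over(events, 50)))  # 2 (customers c2 and c3)
```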

Habit 6: Validate your data early and often

Even with a thorough data plan and close alignment between growth teams and engineers, mistakes can happen. Bad data can slip past initial quality checks and into your internal pipelines. In Data Pipelines Pocket Reference: Moving and Processing Data for Analytics, author James Densmore highlights how common data flaws, such as duplicate, orphaned, or incomplete records, text encoding mistakes, and mislabeled or unlabeled data points, can lead to significant problems with activation and analysis if they are not detected and corrected.
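To make three of these flaws concrete, the sketch below shows simplified checks for duplicate, incomplete, and orphaned records. These are illustrations written for this post, not code from the book. The record fields (`event_id`, `event`, `customer_id`, `timestamp`) and the set of known customers are hypothetical.

```python
from collections import Counter

REQUIRED_FIELDS = ("event_id", "event", "customer_id", "timestamp")  # hypothetical schema


def find_duplicates(records, key="event_id"):
    """IDs that appear on more than one record."""
    counts = Counter(r.get(key) for r in records)
    return sorted(k for k, n in counts.items() if k is not None and n > 1)


def find_incomplete(records, required=REQUIRED_FIELDS):
    """(index, missing_fields) for records with absent or blank required fields."""
    problems = []
    for i, r in enumerate(records):
        missing = [f for f in required if r.get(f) in (None, "")]
        if missing:
            problems.append((i, missing))
    return problems


def find_orphans(records, known_customers):
    """Event IDs whose customer_id does not match any known customer."""
    return [
        r.get("event_id")
        for r in records
        if r.get("customer_id") and r["customer_id"] not in known_customers
    ]


records = [
    {"event_id": "e1", "event": "View Product", "customer_id": "c1", "timestamp": "2024-01-01T10:00:00Z"},
    {"event_id": "e1", "event": "View Product", "customer_id": "c1", "timestamp": "2024-01-01T10:00:00Z"},
    {"event_id": "e2", "event": "View Product", "customer_id": "", "timestamp": "2024-01-01T10:05:00Z"},
    {"event_id": "e3", "event": "Add To Cart", "customer_id": "c2"},
]
print(find_duplicates(records))         # ['e1']
print(find_incomplete(records))         # [(2, ['customer_id']), (3, ['timestamp'])]
print(find_orphans(records, {"c1"}))    # ['e3']
```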

It is critical to create and implement processes to validate data, not just once but at several stages along the data lifecycle. While there are many ways to validate data, the purpose of these checks is to ensure that data at a given point along the pipeline matches the expectations laid out in the data plan. Learn more about what good data validation looks like here.
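One way to express "matches the expectations laid out in the data plan" in code is a function that compares each event with the plan and reports every mismatch. The sketch below assumes a hypothetical plan format (event name mapped to attributes, each with a type name and a required flag) and only two types, `string` and `number`; a real implementation would cover whatever types and rules your plan defines. The same check could be run at collection time, on ingestion, and again before activation.

```python
# Hypothetical data plan: event name -> {attribute: (type_name, required)}.
DATA_PLAN = {
    "View Product": {"sku": ("string", True), "price": ("number", True), "category": ("string", False)},
    "Add To Cart": {"sku": ("string", True), "quantity": ("number", True)},
}

# How each planned type name is checked (bool is excluded from "number"
# because Python treats True/False as integers).
TYPE_CHECKS = {
    "string": lambda v: isinstance(v, str),
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
}


def validate_event(event, plan=DATA_PLAN):
    """Return a list of problems; an empty list means the event matches the plan."""
    name = event.get("event")
    if name not in plan:
        return [f"unplanned event: {name!r}"]
    spec = plan[name]
    attrs = event.get("attributes", {})
    errors = []
    # Every planned attribute must be present (if required) and of the right type.
    for attr, (type_name, required) in spec.items():
        if attr not in attrs:
            if required:
                errors.append(f"{name}: missing required attribute {attr!r}")
        elif not TYPE_CHECKS[type_name](attrs[attr]):
            got = type(attrs[attr]).__name__
            errors.append(f"{name}: {attr!r} should be {type_name}, got {got}")
    # Attributes that the plan does not mention are flagged as well.
    for attr in attrs:
        if attr not in spec:
            errors.append(f"{name}: unplanned attribute {attr!r}")
    return errors


print(validate_event({"event": "View Product", "attributes": {"sku": "SW-RED-M", "price": "45"}}))
# ["View Product: 'price' should be number, got str"]
```

Returning a list of messages rather than raising on the first error lets a pipeline stage log every problem with a batch at once, or route failing events to a quarantine area for review.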