How to choose the right foundation for your data stack

If you’re relying on downstream activation tools to combine data events into profiles, don’t. You’ll end up with fragmented and redundant datasets across systems. Enriching each data point before it is forwarded downstream will prevent this problem, but not all customer data infrastructure solutions deliver this capability.

When the data at your disposal is consistently accurate, up-to-date, and reliable, your organization will get value from its data faster, and stakeholders will not miss out on opportunities to improve customer experiences. Teams will look to data as a valuable asset rather than a challenge, which will support a data-driven culture throughout your organization.

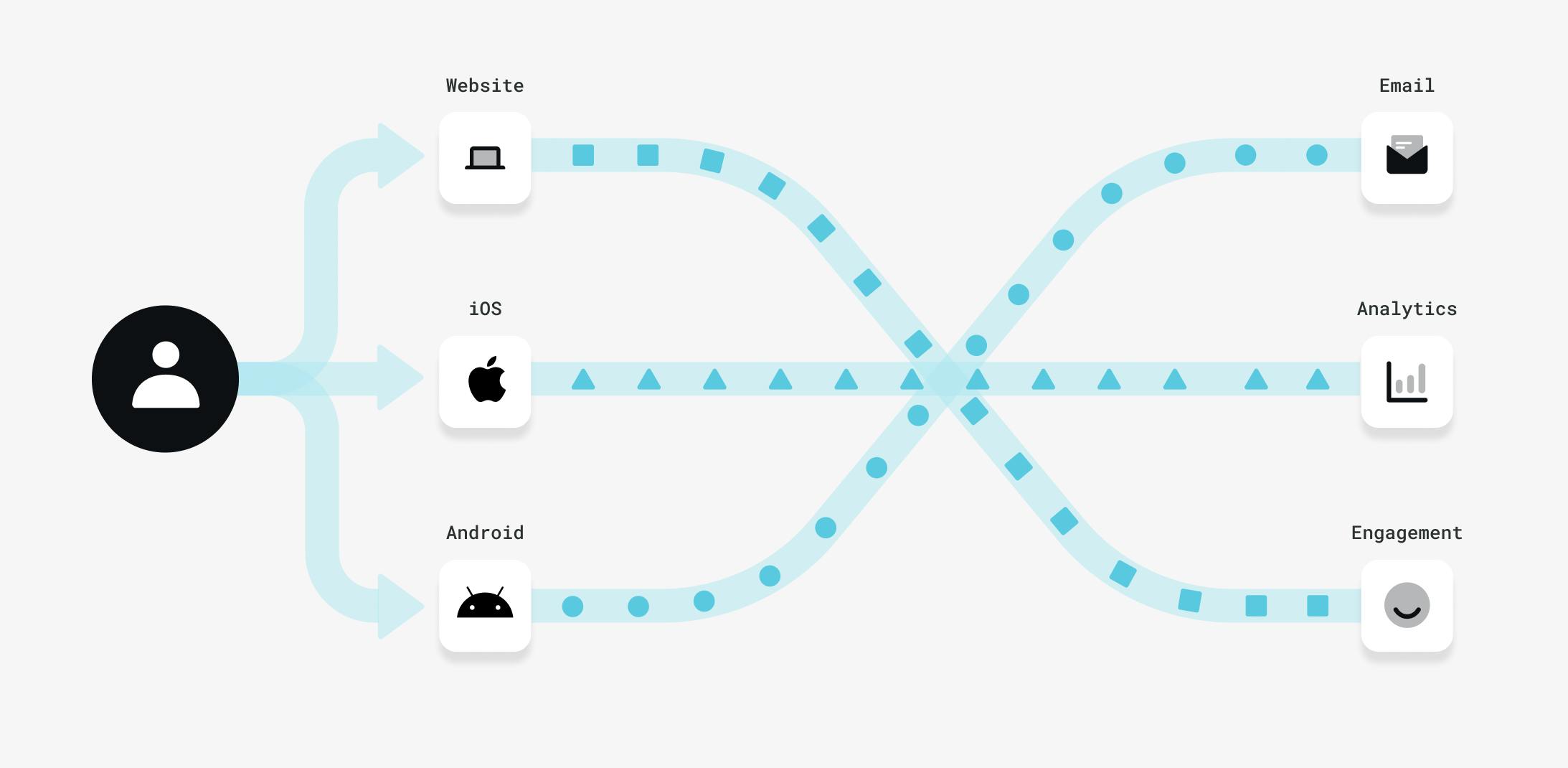

Of course, collecting data alone does not guarantee these outcomes. One of the biggest obstacles standing in the way of results like these is data silos, and the erosion of data quality that occurs when customer data lives in separate systems. This threat only increases as your teams begin incorporating more downstream activation tools. If you fail to put processes in place that enrich your data before it reaches these downstream systems, each tool will end up with a unique, incomplete picture of your customers. When this happens, teams can easily make decisions based on inaccurate and incomplete information, and the effort that went into strategizing and implementing data collection will not have paid off the way it could have.

When your data is flowing to multiple destinations, sending events directly to these disparate systems without enriching them first will eventually undermine your data quality. In this article, we will explore how this happens, and what you can do to prevent it.

Maintaining separate SDKs for each downstream system

Whenever your team adopts a new third-party tool for activating data, one option for sending data to that system is to implement its SDK directly into your apps and websites. If this is the only third-party solution you anticipate having to integrate into your products, client-side integration is not a problem. In reality, however, the likelihood that you’ll only want to deliver data to this one tool is very slim, and your team’s tool requirements will likely change over time. As soon as more downstream systems are adopted, the problems with this approach begin to arise.

The immediate problem

One of the most immediate pitfalls of integrating a myriad of separate SDKs is the technical overhead this entails. Maintaining multiple integrations can quickly become unsustainable for engineering teams, as each time the marketing or product team wants to track an additional user behavior, developers will have to write and deploy code to do so. Aside from implementing each new data event separately, vendor API updates can trigger additional development cycles as well, as that vendor’s integration may need to be reconfigured and redeployed. The more updates and maintenance work your developers do, the less time they can spend on building and shipping core features. Furthermore, this becomes a serious bottleneck for data stakeholders as well, since these engineering cycles also block marketing and product teams from activating this data.

An imperfect solution

Clearly, something has to be done to stop the engineering team from hemorrhaging their hours, and allow growth teams to activate data more quickly. You survey the data landscape, and find that the tools that simplify data collection are many and varied. You reach for a data routing solution like Rudderstack or Metarouter, or a real-time event streaming solution like Snowplow Analytics that ingests data from all sources through a single SDK, then forwards it to individual downstream systems. That’s essentially all this tool does, however. It can be thought of as a “dumb pipe” since it does not enrich this data or provide any functionality beyond routing your events to their destinations. When your data arrives in downstream systems, it is still in the form of isolated events, not unified customer profiles.
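To make the “dumb pipe” idea concrete, here is a minimal sketch of a router that fans each event out to every destination without changing it. The `EventRouter` class, the destination names, and the event fields are all hypothetical and are not taken from any vendor's SDK.

```python
from typing import Callable, Dict, List

Event = Dict[str, str]


class EventRouter:
    """Hypothetical 'dumb pipe': forwards events unchanged to every destination."""

    def __init__(self) -> None:
        self.destinations: Dict[str, Callable[[Event], None]] = {}

    def add_destination(self, name: str, handler: Callable[[Event], None]) -> None:
        self.destinations[name] = handler

    def track(self, event: Event) -> None:
        # No enrichment and no identity resolution: each destination
        # receives its own copy of the raw, isolated event.
        for handler in self.destinations.values():
            handler(dict(event))


# Two stand-in destinations that simply collect what they receive.
analytics_inbox: List[Event] = []
engagement_inbox: List[Event] = []

router = EventRouter()
router.add_destination("analytics", analytics_inbox.append)
router.add_destination("engagement", engagement_inbox.append)

router.track({"name": "add_to_cart", "idfa": "IDFA-123"})
```

Both inboxes end up holding the same raw event. Nothing in it says which customer profile it belongs to, so each destination is left to work that out for itself.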

After integrating this system into your products, the storm appears to subside. Engineers have their time back, and growth teams can get data into their engagement and analytics tools with far less friction. Initially, it appears that this solution has solved the problem, and everyone is happy. That is, however, until another issue begins to emerge.

Fragmented copies of your data

In addition to this unmanageable technical overhead, another problem has been brewing since you began collecting your first events, and the tool you adopted to simplify data ingestion has done nothing to prevent it.

As each tool has been receiving data events, it has been combining them with other events that it determines have been performed by the same user. This process is known as identity resolution, and it is what transforms individual data points into complete pictures of your customers. Since each separate system is performing identity resolution on its own, however, redundant and often conflicting copies of your data have started to emerge in each separate tool.

Let's explore in more detail what has led to this situation. Say, for example, you collect a data event from your iOS app. The only information within this event that can associate it with an individual user is the mobile device identifier, which in this case is the IDFA. Your product team forwards this event to Amplitude, and when it arrives, Amplitude compares the IDFA to those on profiles already in the system. It doesn’t find a match, so it creates a new profile using this IDFA as an identifier.

Amplitude isn't the only tool where this event is being sent, however. Your marketing team also forwards it to Braze where they can leverage it in engagement campaigns. Since these two tools enable different types of use cases, it is likely that one of them will contain events that the other does not have. In this example, let’s say Braze has already received numerous events tied to a profile using this IDFA as a unique identifier. When this new event arrives, Braze appends it to this known user profile. You now have the same event tied to two separate user profiles in different tools, but in reality, this is the same user.
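The scenario above can be sketched in a few lines. This is a simplified illustration, not how Amplitude or Braze actually store profiles: the `DownstreamTool` class and the event names are hypothetical, and each tool is reduced to a lookup keyed by IDFA.

```python
from typing import Dict, List


class DownstreamTool:
    """Hypothetical activation tool that resolves identity on its own."""

    def __init__(self, name: str) -> None:
        self.name = name
        # Maps an IDFA to the list of event names stored on that profile.
        self.profiles: Dict[str, List[str]] = {}

    def receive(self, idfa: str, event_name: str) -> str:
        # Each tool only checks the profiles it already holds.
        if idfa not in self.profiles:
            self.profiles[idfa] = []
            outcome = "created new profile"
        else:
            outcome = "appended to existing profile"
        self.profiles[idfa].append(event_name)
        return outcome


analytics_tool = DownstreamTool("analytics")
engagement_tool = DownstreamTool("engagement")

# The engagement tool has already seen this device; the analytics tool has not.
engagement_tool.receive("IDFA-123", "push_opened")
engagement_tool.receive("IDFA-123", "email_clicked")

# The same new event is forwarded to both tools.
analytics_outcome = analytics_tool.receive("IDFA-123", "purchase")
engagement_outcome = engagement_tool.receive("IDFA-123", "purchase")
```

After the new event arrives, the analytics tool holds a one-event profile while the engagement tool holds a three-event profile for the same person. Neither tool has any way of knowing that its copy differs from the other's.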

Over time, this problem compounds as your teams adopt additional tools for data activation. Each system will develop its own copy of your data, and these copies will drift further apart. With inconsistent and incomplete profiles siloed across downstream tools, marketing and product teams will miss opportunities to reach customers and improve user journeys. What’s worse, they may even deliver irrelevant messaging or offers based on inaccurate data.

Without identity resolution, downstream systems have a fragmented and incomplete view of your customers.

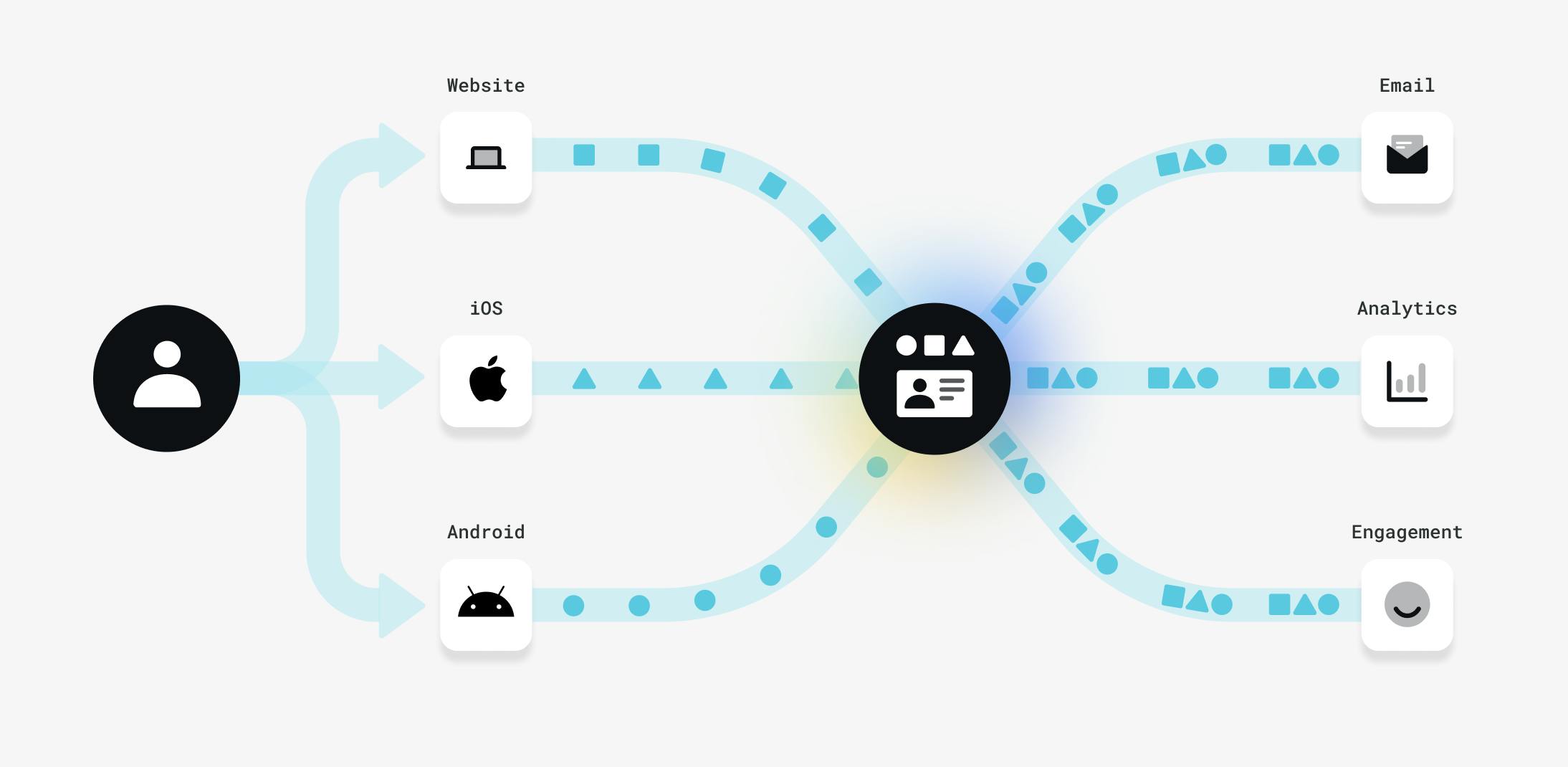

The long term solution: Develop an identity resolution capability

Avoiding this problem requires combining data into unified profiles before, not after, it enters activation systems. This raises another important question about your data stack: What is the best way to establish an identity resolution capability? There are two main approaches: having your data engineering team build an identity resolution capability in house, or adopting a data infrastructure tool that delivers it out of the box.

Performing identity resolution gives each activation system a complete view of your customers.

Building identity resolution in house requires your data engineers to conceive of a strategy for recognizing when multiple events across customer touchpoints were performed by the same customer. Then, they need to build the data transformation processes to both associate these data points with a single user profile, and continuously update user records as customers continue to produce data events. While this strategy works for some companies, it can quickly become an unsustainable drain on technical teams, and a home-grown solution can be difficult to scale and adapt to the evolving needs of growth teams and changing privacy regulations.

Another approach is to “buy” rather than build this capability by investing in a data infrastructure solution such as a best-in-class CDP. mParticle’s IDSync, for example, gives customers the power and flexibility to create a unified view of the customer without demanding ongoing engineering overhead. It also delivers the ability to continuously update your identity resolution strategy, and take control over data governance, policy, and security. Since mParticle’s IDSync is based on a deterministic rather than probabilistic identity resolution strategy, new user events are only added to an existing record when the two share a known identifier, meaning this match is always made with 100% confidence. Deterministic rather than probabilistic matching should always be the basis of your core identity resolution strategy, and this blog post explains why.
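As a rough illustration of deterministic matching, the sketch below attaches an event to an existing profile only when the two share a known identifier, then enriches the event with that profile before it would be forwarded downstream. It is not IDSync's implementation: the `IdentityResolver` class, the identifier types, and the profile ID format are assumptions made for this example, and it deliberately skips harder cases such as merging two existing profiles.

```python
from typing import Dict, Optional, Tuple


class IdentityResolver:
    """Hypothetical deterministic resolver: exact identifier matches only."""

    def __init__(self) -> None:
        # (identifier type, value) -> profile ID
        self.id_index: Dict[Tuple[str, str], str] = {}
        # profile ID -> identifiers known for that profile
        self.profiles: Dict[str, Dict[str, str]] = {}
        self._next_id = 1

    def resolve(self, identifiers: Dict[str, str]) -> str:
        profile_id: Optional[str] = None
        # Deterministic rule: match only on an exact, already-known identifier.
        for pair in identifiers.items():
            if pair in self.id_index:
                profile_id = self.id_index[pair]
                break
        if profile_id is None:
            profile_id = f"profile-{self._next_id}"
            self._next_id += 1
            self.profiles[profile_id] = {}
        # Record any new identifiers so later events can match on them.
        for id_type, value in identifiers.items():
            self.id_index.setdefault((id_type, value), profile_id)
            self.profiles[profile_id].setdefault(id_type, value)
        return profile_id

    def enrich(self, event: dict) -> dict:
        # Attach the unified profile to the event before it is forwarded.
        profile_id = self.resolve(event["identifiers"])
        return {
            **event,
            "profile_id": profile_id,
            "known_identifiers": dict(self.profiles[profile_id]),
        }


resolver = IdentityResolver()
first = resolver.enrich({"name": "app_open", "identifiers": {"idfa": "IDFA-123"}})
second = resolver.enrich(
    {"name": "login", "identifiers": {"idfa": "IDFA-123", "email": "a@example.com"}}
)
third = resolver.enrich(
    {"name": "web_purchase", "identifiers": {"email": "a@example.com"}}
)
other = resolver.enrich({"name": "app_open", "identifiers": {"idfa": "IDFA-999"}})
```

In this run, the login event links the email address to the profile first created from the IDFA, so the later web purchase lands on that same profile even though it carries no device identifier. The event from an unknown device gets a new profile instead of being guessed onto an existing one.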

Interested in learning more about the technical tradeoffs between building identity resolution in house and leveraging a CDP to handle this process? This article provides further detail.