Everything you need to know about data integrations

Data integrations are ubiquitous throughout the SaaS ecosystem. But not all data integrations are created equal. This article walks through the different types of integrations commonly available and provides tips on how to choose the right integration types for your use cases.

For great analysts, product managers, and marketers, customer data is like paint.

One color is great, but it can only get you so far (though Ryman and his peers may disagree). True insight comes when you’re able to mix colors and create a unique perspective.

The customer data sets that live within each individual system–payment data in your commerce tool, support records in your customer service tool, A/B test results in your analytics tool–are each valuable in their own right. But it’s not until this information is connected across systems that you’re able to unlock business-changing insights and build impactful customer experiences.

The process through which data is shared across tools and systems is referred to as integration. Businesses have moved away from managing multiple functions within a single cloud suite, such as Adobe or Oracle, and towards building a best-in-breed stack of independent vendors. At the same time, customer data has begun to be leveraged by departments beyond marketing, such as customer service. Both shifts have made integrating data across tools increasingly important. Today, software vendors big and small offer a vast library of integrations to help you connect data across your tech stack.

But with integrations becoming so ubiquitous across the SaaS ecosystem, it’s worth considering: Are all integrations created equal?

When evaluating any tool, especially Customer Data Platforms, it’s important to ensure that the integrations on offer match your planned use cases. This blog post will break down the different types of integrations commonly available and provide details on which use cases are best supported by each type, hopefully helping you navigate the ever-expanding SaaS ecosystem and make smarter vendor decisions.

Batch processing API integrations

First, let’s break down batch processing integrations. Batch integrations use a REST API or scheduled file imports to forward large data sets from one system to another at a regular cadence. This cadence could be hourly, daily, or weekly, depending on the size of each batch and how it is being processed before forwarding. During batch processing, it’s common for data points to be transformed as they leave the source system to match the data schema of the destination system, helping to make data actionable once it has arrived. This is commonly referred to as an ETL operation (extract, transform, load).
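To make the ETL idea concrete, here is a minimal sketch of one batch pass in Python. Everything in it is an assumption made for illustration: the field names, the in-memory `SOURCE_ROWS` list standing in for a source system, and the `warehouse` list standing in for a destination. A real integration would read from an API or file drop and be triggered by a scheduler.

```python
from datetime import datetime, timezone

# Hypothetical source records, as they might be exported from a commerce tool.
SOURCE_ROWS = [
    {"user": "u1", "amount_cents": 1999, "ts": 1700000000},
    {"user": "u2", "amount_cents": 500, "ts": 1700003600},
]


def extract(rows):
    """Extract: read one batch from the source system (here, an in-memory list)."""
    return list(rows)


def transform(row):
    """Transform: rename fields and convert units to match the destination schema."""
    return {
        "customer_id": row["user"],
        "amount": row["amount_cents"] / 100,
        "purchased_at": datetime.fromtimestamp(row["ts"], tz=timezone.utc).isoformat(),
    }


def load(records, destination):
    """Load: append the transformed batch to the destination store."""
    destination.extend(records)
    return len(records)


def run_batch(rows, destination):
    """Run one ETL pass; a scheduler would call this hourly, daily, or weekly."""
    batch = extract(rows)
    return load([transform(r) for r in batch], destination)


warehouse = []  # stand-in for the destination system
loaded = run_batch(SOURCE_ROWS, warehouse)
```

The point of the sketch is the shape of the work: records move as a group, and the schema mapping happens in one place before anything reaches the destination.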

The typical batch processing integration architecture consists of the following components:

- Original data ingestion: Data collection from the digital properties where events are being created, such as mobile apps or websites

- Data storage: A distributed file store that serves as a repository for high volumes of data in various formats

- Batch processing integration system: Solution that processes data files using long-running batch jobs to filter, aggregate, and prepare data for analysis

- Analytical data store: System that can receive, store and serve processed data, and enable data consumers to access that data through an interface

Batch integrations are extremely useful for transferring large data sets between systems on an asynchronous basis, such as when reporting on historical data, moving data to long-term storage, or training a machine learning model. They are less useful for use cases that need to be powered by a flow of real-time data, such as purchase confirmation emails. For time-sensitive workflows, it’s important to transfer data with a real-time streaming integration.

Streaming API integrations

In contrast with batch integrations, which share groups of records at a regular cadence, real-time streaming integrations share individual records as soon as they are made available. The speed of streaming integrations is often measured in milliseconds, rather than hours or days.

Once you have a streaming integration connected between two systems, the destination system will begin to receive data from the source system as it becomes available on an ongoing basis. This capability is particularly critical for tools such as Customer Data Platforms, which provide value by making it easy to get real-time data into all of your marketing and analytics systems as it is created in client-side and server-side environments.

The typical streaming integration architecture consists of the following components:

- Real-time message ingestion: A system built to capture and store real-time messages from client-side sources, such as mobile apps, websites, and OTT devices, as well as server-side environments

- Stream processing: A system for processing real-time messages, which filters, aggregates, and otherwise prepares data for analysis in a matter of milliseconds

- Data destination: System that can receive and store processed data in real time and make it available to data consumers for real-time workflows
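The three components above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a production design: a standard-library `queue.Queue` stands in for the message ingestion layer, the hypothetical `process` function stands in for stream processing, and a plain list stands in for the destination. A real consumer would block and wait for new messages rather than stop when the queue is empty.

```python
import queue


def process(message):
    """Stream processing: filter and normalize a single message."""
    if message.get("event") is None:
        return None  # drop malformed messages that carry no event name
    return {"event": message["event"].lower(), "user_id": message["user_id"]}


def drain(ingest, destination):
    """Forward each message individually, in arrival order, until the queue is empty."""
    forwarded = 0
    while True:
        try:
            message = ingest.get_nowait()
        except queue.Empty:
            return forwarded
        record = process(message)
        if record is not None:
            destination.append(record)
            forwarded += 1


# Real-time message ingestion (stand-in): messages arrive one at a time.
ingest = queue.Queue()
ingest.put({"event": "Purchase", "user_id": "u1"})
ingest.put({"user_id": "u2"})  # no event name, so it is filtered out
ingest.put({"event": "Add_To_Cart", "user_id": "u3"})

destination = []  # stand-in for the data destination
count = drain(ingest, destination)
```

Compared with a batch job, the unit of work here is a single record, which is what makes low latency possible.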

Today’s customers move fast. To meet their expectations, certain aspects of the customer experience need to match their pace. Time-based engagements such as triggered in-app messages, transactional emails, and display advertisements must be powered by integrations that enable you to move data between systems quickly.

Streaming API integrations with event enrichment

Streaming integrations provide value by helping you forward events from a source system to a destination system as they’re created. But what if you wanted to get more than just the original event to your destination system?

Some platforms offer advanced streaming integrations that allow you to enrich events with any relevant data that platform has in storage as they’re collected and forward those enriched events to the destination system. For example, instead of simply normalizing a purchase event and forwarding it downstream, that purchase event can be tied to a customer profile via an identity resolution architecture and enriched with profile attributes, such as Lifetime Value or consent state, or additional identifiers, such as email, as it is forwarded. Event enrichment is important because it provides a more comprehensive view of your data and helps you support data privacy more effectively. For example, an Email Service Provider (ESP) may require an email address in order to deliver emails. But if an incoming data point is sourced from a mobile device, where you only have IDFA or IDFV, that event is "useless" to your ESP (if it doesn't support inherent identity resolution capabilities), because the ESP doesn't know which user to associate the event with.
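Here is a rough sketch of what enrichment looks like for the ESP example, written in Python. The `IDENTITY_MAP` and `PROFILES` lookups are hypothetical stand-ins for an identity resolution layer and a profile store, and the field names are assumptions chosen for illustration.

```python
# Hypothetical profile store keyed by profile ID.
PROFILES = {
    "profile-1": {
        "email": "ada@example.com",
        "lifetime_value": 240.0,
        "consent": "opted_in",
    },
}

# Hypothetical identity map from (identifier type, value) to profile ID.
IDENTITY_MAP = {("idfv", "ABC-123"): "profile-1"}


def enrich(event):
    """Return a copy of the event with profile attributes attached, if a profile is found."""
    enriched = dict(event)
    profile_id = IDENTITY_MAP.get((event["id_type"], event["id_value"]))
    if profile_id is not None:
        enriched["profile"] = dict(PROFILES[profile_id])
    return enriched


# A purchase event from a mobile device that only carries an IDFV.
mobile_event = {"event": "purchase", "id_type": "idfv", "id_value": "ABC-123"}
known = enrich(mobile_event)

# An event from a device that has not been tied to any profile yet.
unknown = enrich({"event": "purchase", "id_type": "idfv", "id_value": "ZZZ-999"})
```

After enrichment, the first event carries an email address that an ESP could act on, while the second is forwarded unchanged because no profile matched.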

When evaluating systems that offer streaming integrations with event enrichment, it’s important to make sure that they allow you to control which enriched attributes are forwarded to other tools. Many SaaS tools will charge you based on the volume of data that you are sending into their system, so it’s important to be able to manage what data you are sharing. Data minimization is also a data privacy best practice.
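One simple way to picture this control is a per-destination allowlist of attributes. The Python sketch below is illustrative only: the `minimize` helper, the attribute names, and the two destinations are assumptions, not any vendor's actual configuration.

```python
# Hypothetical enriched event, as it might look before forwarding.
ENRICHED_EVENT = {
    "event": "purchase",
    "profile": {
        "email": "ada@example.com",
        "lifetime_value": 240.0,
        "consent": "opted_in",
    },
}


def minimize(event, allowed):
    """Keep only the profile attributes that a given destination is allowed to receive."""
    profile = event.get("profile", {})
    return {
        "event": event["event"],
        "profile": {key: value for key, value in profile.items() if key in allowed},
    }


# Each destination gets its own allowlist (names are illustrative).
esp_payload = minimize(ENRICHED_EVENT, allowed={"email", "consent"})
analytics_payload = minimize(ENRICHED_EVENT, allowed={"lifetime_value"})
```

With this kind of filter in place, the ESP receives the email address it needs, and the analytics tool never sees it.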

Audience integrations

Thus far, we’ve been focused on integration types that allow you to forward event data across systems. Many integration use cases, however, depend not on sending a list of events, but on sending a list of user records.

Audience integrations allow you to forward a segment of user records defined in one system to another system. Audience integrations can be built with either bulk or stream processing, each of which has different advantages and serves different types of use cases:

- Bulk processing-based audience integrations send an updated record of audience membership at a regular cadence, such as once a day

- Stream processing-based audience integrations share any updates to audience membership as they occur, helping you keep your segments up-to-date across systems in real time. If the destination tool offers an Audience API that lets you add, update, or remove memberships, you can manage memberships directly
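The difference between the two approaches comes down to what is sent: a full snapshot of membership, or only the changes. The Python sketch below illustrates that contrast with hypothetical function names and user IDs. In a real streaming integration each change would be forwarded as it happens rather than computed by comparing two snapshots.

```python
def bulk_snapshot(current_members):
    """Bulk: send the complete membership list on a schedule."""
    return sorted(current_members)


def stream_updates(previous_members, current_members):
    """Stream: send only the additions and removals since the last known state."""
    return {
        "add": sorted(current_members - previous_members),
        "remove": sorted(previous_members - current_members),
    }


# Audience membership before and after a user converts and a new user qualifies.
before = {"u1", "u2", "u3"}
after = {"u2", "u3", "u4"}

snapshot = bulk_snapshot(after)
updates = stream_updates(before, after)
```

The `add` and `remove` lists map naturally onto the add and remove operations that an Audience API on the destination side might expose.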

When evaluating audience integrations, it’s important to select the right processing type for your use case. For example, if you’re aiming to forward audiences across systems to power personalized messaging, it’s important to use a stream processing-based audience integration. Using bulk processing for this purpose may lead you to deliver a personalized product offer to a customer who has already bought that product in the time since the audience was last updated. For audience use cases that are not time-sensitive, such as analyzing the past purchasing behavior of a customer segment, bulk processing-based audiences are a suitable choice.

But faster is always better, right? Not necessarily. On the data processing side, streaming requires a system that is able to support high-volume writes, which can be expensive. On the data consumption side, destination tools need to allow you to activate, analyze, and connect data as it is made available. For this reason, stream processing integrations are not the best option for all circumstances.

Audience integrations are key for successful user data activation. No matter how rich your data is, leaving it in dashboards and databases often leaves marketers frustrated that they can’t execute user experience ideas. Audience integrations fill this gap by connecting user data from all the systems and all the teams (even the cool ML-augmented traits you've built in-house) to communication channels, making it possible to deliver personalised messaging (emailing, remarketing, notifications etc.).

Chief Technology Officer at Human37

Bi-directional integrations

The most successful teams use data to evolve the customer experience over time, testing-and-learning across channels. From a technical perspective, doing so requires more than being able to send data from point A to point B.

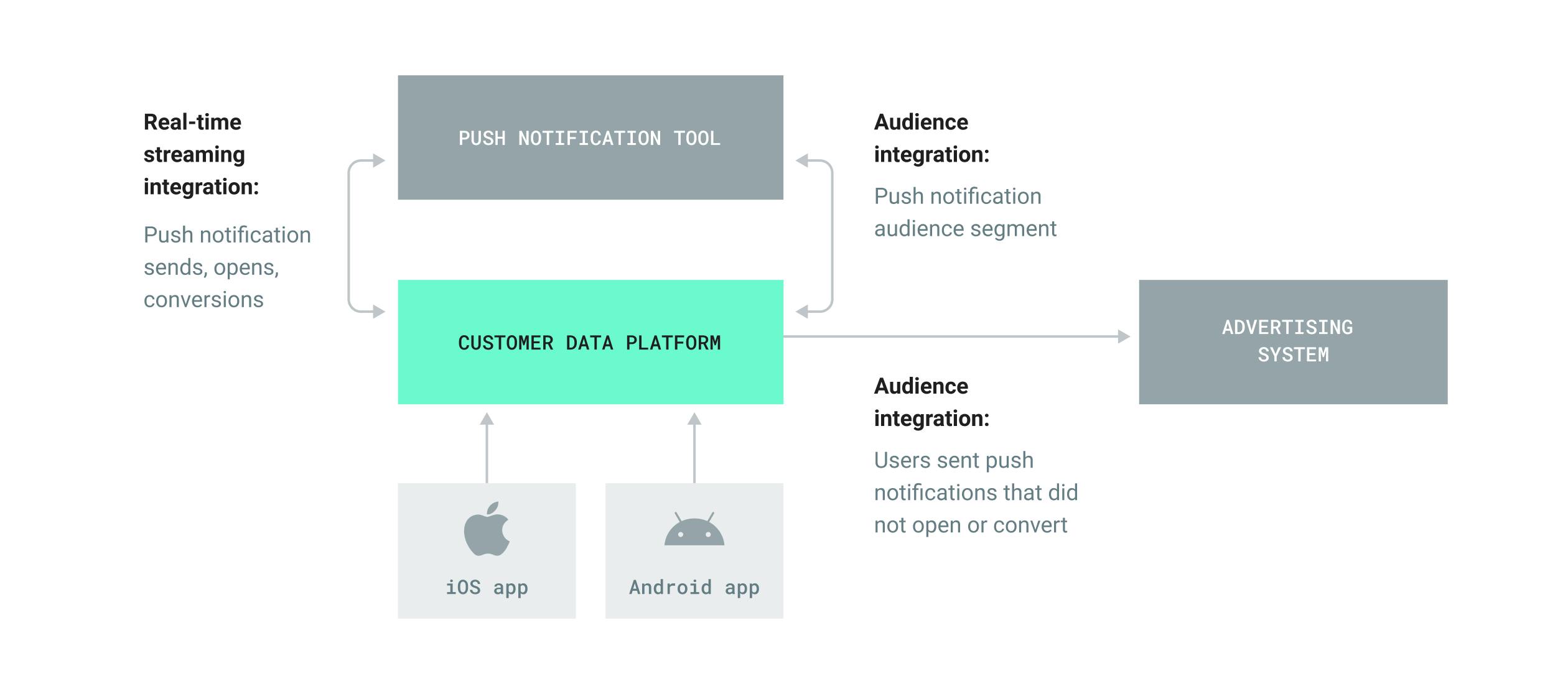

Bi-directional integrations allow you to both send data to a destination and receive data from that same destination. Through bi-directional integrations, you can build round-trip data flows that make it easier to surface insights and apply them to decisions. Let’s walk through an example:

Say you want to send a targeted offer to a group of new mobile app users. To begin, you would forward an audience segment from your CDP to your push notification tool using your CDP’s Audience integration with the push tool. Next, you would use the audience received as the target segment for the push notification experience you have designed. As the push notifications are delivered, you can ingest notification engagement data from your push tool into your CDP using a real-time streaming integration, thus completing a round-trip data flow. Finally, you can use this push engagement data, now available in your CDP, to define an audience of users who were sent the push message but did not open the message or later convert. This audience can be connected to your advertising system of choice via an Audience integration for retargeting.
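The final step of that round trip, defining the retargeting audience from engagement data, can be sketched in Python as follows. The event shape and the `retargeting_audience` helper are hypothetical, and the sketch assumes that "did not open or later convert" means the user did neither.

```python
def retargeting_audience(sent_user_ids, engagement_events):
    """Return users who were sent the push but neither opened it nor converted."""
    engaged = {
        event["user_id"]
        for event in engagement_events
        if event["type"] in {"open", "conversion"}
    }
    return sorted(set(sent_user_ids) - engaged)


# Audience forwarded from the CDP to the push tool.
sent = ["u1", "u2", "u3", "u4"]

# Engagement data ingested back from the push tool (hypothetical shape).
engagement = [
    {"user_id": "u1", "type": "open"},
    {"user_id": "u3", "type": "delivered"},
    {"user_id": "u4", "type": "conversion"},
]

retarget = retargeting_audience(sent, engagement)
```

The resulting list is what would be forwarded to an advertising system through an Audience integration.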

Tips for evaluating integrations

As you evaluate SaaS tools, especially Customer Data Platforms, it’s important to always validate that the integrations promised by a vendor meet your needs. A few guiding questions that you can keep in mind are:

- Can this integration forward data fast enough for my use case?

- Can my existing tools receive data at the speed at which this integration will forward it?

- Can this integration enrich forwarded data with the attributes I need (customer identifiers, consent state, etc.)?

- Can this integration share complete audience segments?

mParticle supports several kinds of integrations, including stream processing-based integrations with enrichment, real-time and batch audience integrations, and bi-directional integrations (which are called Feeds in the mParticle platform). You can learn more about mParticle integrations and browse our library by integration type here.

Additionally, learn how mParticle integrations can be used to power use cases across marketing, product management, and data engineering in our integration use case library here.