Navigating the CDP Noise: Composable sleight of hand

In part one of this two-part series, mParticle CEO and co-founder Michael Katz explores the debate between "composable" vs "packaged" CDPs, offering guidance for organizations trying to understand which type of solution is right for their needs.

When we launched mParticle in 2013, the mission was clear: create a win/win by simplifying the process of connecting customer data to the various marketing tech applications used by teams across the organization, while also reducing engineering overhead. The value created was in removing friction around operationalizing data across the full set of marketing, advertising, and analytics tools.

Fast forward ten years, and a lot has changed. Notably, with the rise of the cloud data warehouse (CDW), many organizations are centralizing data management by placing the CDW at the center of their data architecture. In 2013, cloud data warehouses were in their infancy and adoption was very early. Most companies that built data solutions, mParticle included, also built proprietary data stores out of necessity, as a means to an end.

A warehouse-centric architecture makes sense for many organizations: a single universal data store comprising all of the data created across the org, made available for future use.

While there has been a debate about “packaged” vs “composable” CDPs, the heart of the argument is the role of the data warehouse as the universal storage layer. Contrary to the current narrative, I would argue that the rise of a universal data store further cements the value that CDPs bring: the intelligent activation of customer data.

mParticle has come to embrace the cloud data warehouse ecosystem as a viable data storage option for customers, having recently announced data warehouse sync and value-based pricing to focus on value maximization for our customers.

That said, much of the argument rests on sleight-of-hand product marketing designed to trick the uninformed. The three main claims are:

- “Zero data copy” is an achievable outcome.

- Security risks increase with CDPs.

- CDPs are slow to implement.

Below we will break down the flaws in each argument and offer an alternative conclusion for each.

Trick #1: Zero data copy

The concept of "zero data copy" is often discussed in the context of optimizing data transfer and processing across multiple systems. In theory, data can be moved or processed without being duplicated or copied unnecessarily — aiming to improve performance and reduce resource usage.

Here’s the problem: it does not exist.

As a practical matter, data must be duplicated to applications such as ad partners, customer engagement tools, attribution and measurement tools, loyalty systems, customer support systems, etc. All data is duplicated.

The goal should be copy minimization and efficient data transfer, with the understanding that a true "zero data copy" solution is not technically possible. There are benefits to pursuing data copy minimization, but the arguments in the absolute sense are a creation of product marketers and are meant to distract.
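As a rough illustration of what copy minimization can look like in practice, the sketch below forwards only the fields each downstream tool needs instead of the whole customer profile. The destination names, field names, and the `minimal_copy` helper are all hypothetical and are not taken from any particular product.

```python
from typing import Any

# Hypothetical allowlists: the profile fields each downstream tool needs.
DESTINATION_FIELDS: dict[str, set[str]] = {
    "email_tool": {"customer_id", "email", "first_name"},
    "ad_partner": {"customer_id", "hashed_email"},
}


def minimal_copy(profile: dict[str, Any], destination: str) -> dict[str, Any]:
    """Return only the fields the named destination is configured to receive."""
    allowed = DESTINATION_FIELDS[destination]
    return {key: value for key, value in profile.items() if key in allowed}


# Illustrative profile; every value here is a placeholder.
profile = {
    "customer_id": "c-123",
    "email": "ada@example.com",
    "hashed_email": "placeholder-hash",
    "first_name": "Ada",
    "lifetime_value": 420.0,
}

# The ad partner still receives a copy, but only of the two fields it needs.
ad_payload = minimal_copy(profile, "ad_partner")
```

The data is still copied to the ad partner; what changes is how much of it is copied, which is the realistic target.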

Is duplicating data expensive?

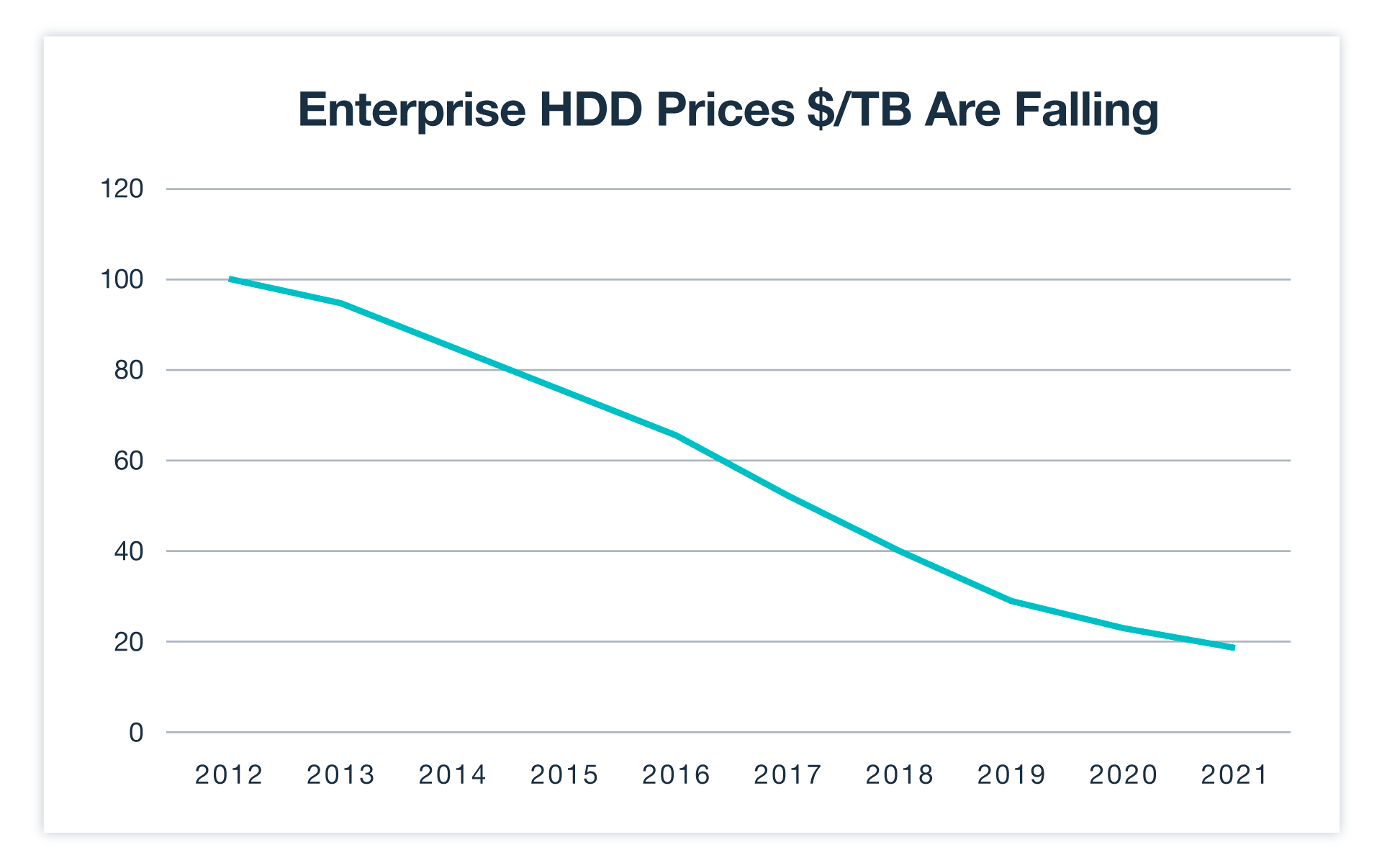

There is a compelling case to be made for minimizing multiple copies of data as a means of cost reduction. Some assert that duplicating data storage is wasteful, but decades of progress have taught us that the storage of data is not where the real cost resides. After all, cheap storage is one of the primary reasons cloud data warehouses exist to begin with; separating storage from compute is another.

If the low cost of storage is the first hit that gets you addicted, the cost of compute to access the data is the price you pay to keep going. Compute, not storage, is the biggest cost in terms of both time and money.

Does duplicating data negatively impact data quality?

There is another assertion that duplicative data storage across multiple systems has a high cost of ownership, since it creates a potential for inconsistencies that must eventually be reconciled. The reality is that data has always been, and always will be, transformed (duplicated) for different intended purposes. This is always a requirement for making use of it. Doing so effectively and efficiently is certainly made easier by an opinionated solution that is purpose-built to address the nuances of customer data.

Trick #2: Security

Fear sells, but the claim that CDPs increase security risk is one of the more egregious and baseless arguments made against them.

By default, all software is insecure, but any software can become secure if the company behind it is committed to making the necessary level of investment. Security risk is not inherent in any single type of software; rather, it reflects the enterprise-grade maturity and company culture of the underlying software vendor.

Software vendors must incorporate sophisticated data protection and security features, including built-in redundancy, data encryption, access control mechanisms, and other measures to safeguard the stored data. Requiring 2FA, investment in pen testing, and company training are just a few of the tactical steps here that can and need to be taken, regardless of the solution. Purpose-built tools put the vendor in better control over their security, rather than relying on another company’s roadmap.

If a company is committed to security as a first-order need, a breach is far less likely to happen.

Takeaway: generalizing security risks as an inherent characteristic of any category of software is wrong; the risk is specific to the individual company.

Trick #3: Slow to implement

Implementing first-party data collection is work that needs to be done regardless of where you intend to put that data. If you are starting fresh, working with a CDP has an advantage: CDP vendors have built the most robust and mature platforms for ensuring quick deployment and the highest data quality.

On the other hand, if you already have your data in a data warehouse through some other means and you are simply looking to activate it, you don't need to reinvent the wheel. Functionality such as data warehouse sync gives you access to a wider ecosystem of data quality features and options, and you can be up and running in a matter of minutes.

As a matter of practice, data quality is usually where customers get tripped up. The CDP is a system designed for the intelligent movement of data, and that requires a focus on data integrity. A solution without these capabilities offers no protection against the issues that inevitably arise and undermine your digital strategy. After all, it's still garbage in, garbage out, just a bit faster.
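To make the data integrity point concrete, here is a minimal sketch of a validation gate that checks events before they are forwarded anywhere. The schema, field names, and helper functions are hypothetical; real data quality tooling is considerably richer than this.

```python
from typing import Any

# Hypothetical schema: required field name -> expected Python type.
REQUIRED_FIELDS: dict[str, type] = {
    "customer_id": str,
    "event_name": str,
    "timestamp_ms": int,
}


def validation_errors(event: dict[str, Any]) -> list[str]:
    """List every way the event violates the schema (empty list if valid)."""
    errors = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in event:
            errors.append(f"missing field: {field}")
        elif not isinstance(event[field], expected_type):
            errors.append(f"wrong type for {field}")
    return errors


def split_events(events: list[dict[str, Any]]):
    """Separate events that are safe to forward from those to quarantine."""
    valid, quarantined = [], []
    for event in events:
        errors = validation_errors(event)
        if errors:
            quarantined.append((event, errors))
        else:
            valid.append(event)
    return valid, quarantined


# Illustrative events; the second has a string timestamp and no event name.
events = [
    {"customer_id": "c-123", "event_name": "purchase", "timestamp_ms": 1700000000000},
    {"customer_id": "c-456", "timestamp_ms": "yesterday"},
]
valid, quarantined = split_events(events)
```

Quarantining bad events instead of silently forwarding them keeps the garbage out of downstream tools, at the cost of someone having to review the quarantine.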

Takeaway: fast garbage is still garbage. Time to initial value is different from time to sustainable value.