How to harness the power of a CDP for machine learning: Part 3

Learn how you can activate machine learning insights across analytics and customer engagement platforms in part three of this three-part series.

This is part three of a three-part series on generating and activating ML insights with a Customer Data Platform. In part one, we explored the advantages of using a CDP to build machine learning into your core data infrastructure. In part two, we took a technical deep dive into how to set up that infrastructure, using mParticle and Amazon Personalize. In this final part, we'll look at how to harness a CDP to activate on ML insights and to track success.

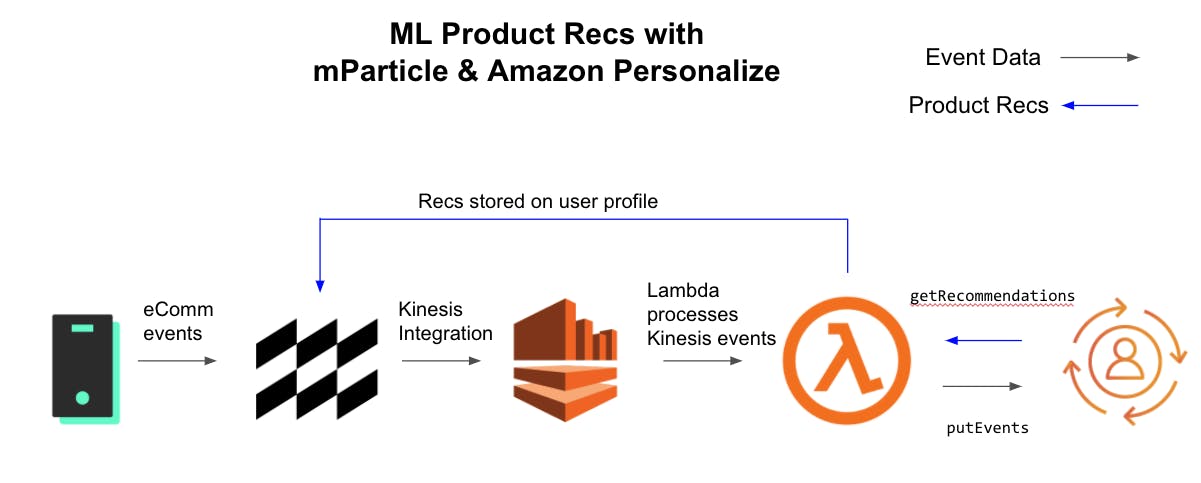

Here's where I was by the end of part two. By using mParticle and AWS together, I am:

- Collecting commerce events from my native apps, website and point-of-sale systems and using mParticle's Identity Resolution capabilities to organize the data into a single view of the customer.

- Using commerce events to train and update an ML Model.

- Requesting updated product recs for each user, every time I receive a new commerce event.

- Storing up-to-date recs on mParticle's user profile.

Now comes the great part. Instead of having valuable insights trapped inside a point solution, or having a set of insights gained from a one-off ETL job, my ML insights are baked right into my core data infrastructure. That means I can use insights to deliver personalized experiences from just about anywhere, and that they’ll stay up-to-date with ongoing user engagements.

Using Recommendations

Native apps and website

The most obvious way to use product recs is to display them in the app UI, for example, in a carousel. mParticle provides an API, Profile API, for my app backend to request user attributes from the User Profile. From there I can resolve the recommended product IDs against my catalog to populate my carousel. You can read more about this use case here.
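As a minimal sketch of that last step, here is how my app backend might resolve recommended product IDs into carousel items once it has a profile in hand. It assumes the profile response carries the recs under `user_attributes.product_recs` (which is where the Lambda from part two writes them) and uses a hypothetical in-memory `catalog`. The `buildCarousel` helper and the sample data are illustrative only and are not part of any mParticle SDK.

```javascript
// Hypothetical catalog keyed by product ID. In practice this would be
// a database or catalog service lookup.
const catalog = new Map([
  ['sku-001', { name: 'Trail Shoe', price: 89, imageUrl: '/img/sku-001.png' }],
  ['sku-002', { name: 'Rain Jacket', price: 120, imageUrl: '/img/sku-002.png' }],
]);

// Assumed profile shape: { user_attributes: { product_recs: [id, ...] } }
function buildCarousel(profile, catalog, maxItems = 5) {
  const attrs = (profile && profile.user_attributes) || {};
  const recs = Array.isArray(attrs.product_recs) ? attrs.product_recs : [];
  return recs
    .filter((id) => catalog.has(id)) // skip IDs that are no longer in the catalog
    .slice(0, maxItems)
    .map((id) => ({ id, ...catalog.get(id) }));
}

// Sample profile with one stale ID that the catalog no longer contains
const profile = {
  user_attributes: { product_recs: ['sku-002', 'sku-999', 'sku-001'] },
};

console.log(buildCarousel(profile, catalog));
```

Filtering against the catalog at render time means a recommendation for a discontinued product is dropped quietly rather than breaking the carousel.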

In stores and support centers

mParticle customers have even used the Profile API to personalize interactions in-store via a tablet kiosk, or by phone through call-center automation. Profile API makes ML insights actionable from anywhere you can make an API call.

Messaging platforms

mParticle automatically forwards user data to messaging platforms like Braze. In Braze, this user data, such as first name and last name, is surfaced as personalization tags. My product recommendations become a "Custom Attribute" tag. I can reference the recs for a user in any Braze content editor using the Liquid templating language:

    {{custom_attribute.${product_recs}}}

This allows me to use my ML recs in any Braze content: emails, push messaging, in-app messages, and content cards. I can also push them back out to native devices via key-value pairs.

Note that in the very basic implementation I've created in Part 2 of this post, the recommendations only include the product SKU. To feed a content template, I'll need more information about the products, such as price, link, and image URL. To get this information I could:

- Expand my Lambda function to import from my product catalogue and store more product detail on the mParticle user profile.

- Use Braze's Connected Content API to look up the product info from the SKU. This is the preferred method as it will result in the most up-to-date product information at the time the message is sent. As a best practice, I want to keep my customer data in the CDP, and my product data in my catalog.

Tracking success

Now that we've set up the infrastructure to generate, continuously refine, and activate ML insights across all channels, the final piece of the puzzle is to figure out what works and what doesn't.

For that, I need my Data Warehouse and my analytics platforms, such as Google Analytics or Amplitude. The commerce data I'm collecting with mParticle is already enough to help me identify general trends. For example, I can tell if the average lifetime value of users is increasing since I started applying ML insights.

To dig deeper, I need to understand which ML campaigns I'm deploying for each user, so that I can compare how successful they are. For example, I might want to compare results for my initial product recommendations recipe against results for a control group that sees a default set of products. Alternatively, if I go back and enrich my ML model with additional datasets, or try a different recipe altogether, I'll want to test the new campaign against the original to check that I've actually improved my outcomes.

We've already seen that maintaining a single complete customer profile helped me activate on ML insights across all platforms. The same benefits apply to analytics. By storing experiment and variant information on the user profile, mParticle automatically makes that data available to any analytics tools that you are forwarding customer data to.

I can use a service like Optimizely to set up my experiments, or I can set up a quick A/B test, just by tweaking my Lambda code a little. Below is a version of the Lambda I set up in part 2 of this post, modified to do a few extra tasks:

- Check to see if the current user has already been assigned to the A or B variant.

- Assign the user to a variant group, if necessary.

- Request product recs from one of two campaigns, depending on the variant.

- Record the variant info on the mParticle user profile.

Changes from the previous version are marked with comments.

const AWS = require('aws-sdk');

const JSONBig = require('json-bigint')({ storeAsString: true });

const mParticle = require('mparticle');

const trackingId = "bd973581-6505-46ae-9939-e0642a82b8b4";

const report_actions = ["purchase", "view_detail", "add_to_cart", "add_to_wishlist"];

const personalizeevents = new AWS.PersonalizeEvents({apiVersion: '2018-03-22'});

const personalizeruntime = new AWS.PersonalizeRuntime({apiVersion: '2018-05-22'});

const mp_api = new mParticle.EventsApi(new mParticle.Configuration(process.env.MP_KEY, process.env.MP_SECRET));

exports.handler = function (event, context) {

for (const record of event.Records) {

const payload = JSONBig.parse(Buffer.from(record.kinesis.data, 'base64').toString('ascii'));

const events = payload.events;

const mpid = payload.mpid;

const sessionId = payload.message_id;

const params = {

sessionId: sessionId,

userId: mpid,

trackingId: trackingId

};

// Check for variant and assign one if not already assigned

const variant_assigned = Boolean(payload.user_attributes.ml_variant);

const variant = variant_assigned ? payload.user_attributes.ml_variant : Math.random() > 0.5 ? "A" : "B";

const eventList = [];

for (const e of events) {

if (e.event_type === "commerce_event" && report_actions.indexOf(e.data.product_action.action) >= 0) {

const timestamp = Math.floor(e.data.timestamp_unixtime_ms / 1000);

const action = e.data.product_action.action;

const event_id = e.data.event_id;

for (const product of e.data.product_action.products) {

const obj = {itemId: product.id,};

eventList.push({

properties: obj,

sentAt: timestamp,

eventId: event_id,

eventType: action

});

}

}

}

if (eventList.length > 0) {

params.eventList = eventList;

personalizeevents.putEvents(params, function(err, data) {

if (err) console.log(err, err.stack);

else {

var params = {

// Select campaign based on variant

campaignArn: process.env[`CAMPAIGN_ARN_${variant}`],

numResults: '5',

userId: mpid

};

personalizeruntime.getRecommendations(params, function(err, data) {

if (err) console.log(err, err.stack);

else {

const batch = new mParticle.Batch(mParticle.Batch.Environment.development);

batch.mpid = mpid;

const itemList = [];

for (const item of data.itemList) {

itemList.push(item.itemId);

}

batch.user_attributes = {};

batch.user_attributes.product_recs = itemList;

// Record variant on mParticle user profile

if (!variant_assigned) {

batch.user_attributes.ml_variant = variant

}

const event = new mParticle.AppEvent(mParticle.AppEvent.CustomEventType.other, 'AWS Recs Update');

event.custom_attributes = {product_recs: itemList.join()};

batch.addEvent(event);

const mp_callback = function(error, data, response) {

if (error) {

console.error(error);

} else {

console.log('API called successfully.');

}

};

mp_api.uploadEvents(batch, mp_callback);

}

});

}

});

}

}

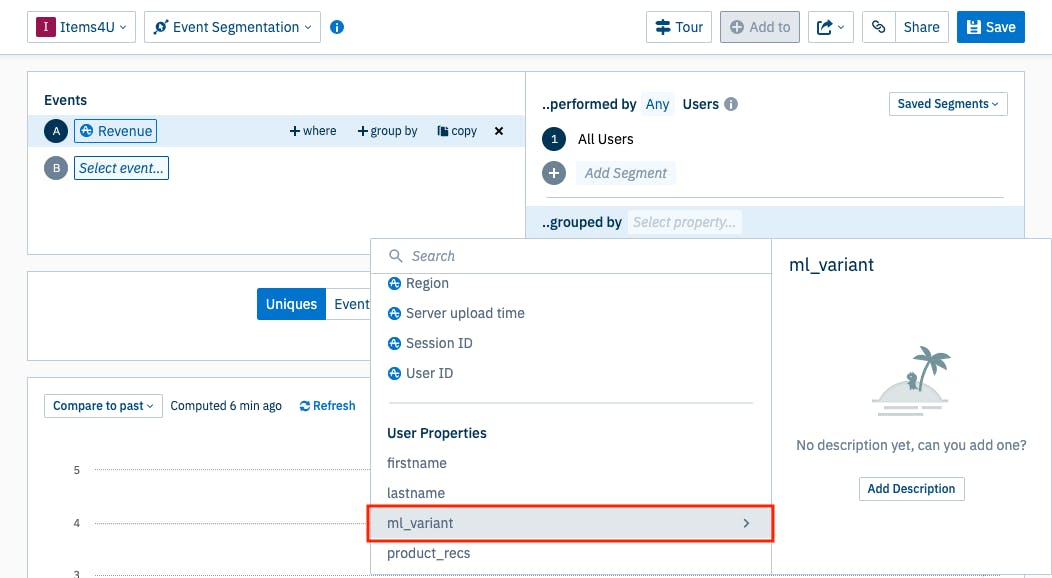

};With variant information stored on the user profile and automatically passed to Amplitude, I can now group my users by variant in any of my Amplitude charts.
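To make the grouping concrete, here is a rough sketch of the comparison such a chart performs. It assumes a hypothetical per-user export with one row per MPID, the `ml_variant` attribute set by the Lambda, and a `purchased` flag that I made up for illustration. It is not how Amplitude computes its charts, just the same idea in a few lines.

```javascript
// Group users by variant and compute a simple conversion rate for each group.
function conversionByVariant(users) {
  const stats = {};
  for (const user of users) {
    const variant = user.ml_variant;
    if (!variant) continue; // users who were never bucketed are left out
    if (!stats[variant]) stats[variant] = { users: 0, converted: 0, rate: 0 };
    stats[variant].users += 1;
    if (user.purchased) stats[variant].converted += 1;
  }
  for (const s of Object.values(stats)) {
    s.rate = s.converted / s.users;
  }
  return stats;
}

// Hypothetical sample rows, one per MPID
const sampleUsers = [
  { mpid: '1', ml_variant: 'A', purchased: true },
  { mpid: '2', ml_variant: 'A', purchased: false },
  { mpid: '3', ml_variant: 'B', purchased: true },
  { mpid: '4', ml_variant: 'B', purchased: true },
  { mpid: '5', purchased: true }, // no variant recorded yet
];

console.log(conversionByVariant(sampleUsers));
```

A real comparison would also need enough users in each group to tell a genuine difference from noise.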

User journeys are not always linear. For example, a customer might look at a recommended product on my website and not buy it immediately, but pick it up later in a brick-and-mortar store, or when they next use the native app. If I'm running my experiments and analytics on a per-device basis, I'll miss that conversion. Because both my analytics in Amplitude and the user bucketing for my A/B test are based on mParticle's master MPID, my A/B test is more complete than if I had bucketed per device. By using the mParticle ID, I can capture the full effect of my campaigns.
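The difference is easy to see with a toy example. The sketch below counts conversions twice over the same events, once keyed by device and once keyed by MPID. The event rows, the `rec_view` event type, and the `countConversions` helper are all hypothetical, and the helper ignores event ordering for brevity.

```javascript
// Hypothetical events: each row has the device it came from and the
// MPID that identity resolution mapped that device to.
const sampleEvents = [
  { mpid: 'u1', deviceId: 'web-1', type: 'rec_view' },
  { mpid: 'u1', deviceId: 'ios-1', type: 'purchase' }, // same customer, different device
  { mpid: 'u2', deviceId: 'web-2', type: 'rec_view' },
];

// Count distinct keys (devices or users) that both saw a recommendation
// and made a purchase.
function countConversions(events, keyOf) {
  const sawRec = new Set();
  for (const e of events) {
    if (e.type === 'rec_view') sawRec.add(keyOf(e));
  }
  const converted = new Set();
  for (const e of events) {
    if (e.type === 'purchase' && sawRec.has(keyOf(e))) converted.add(keyOf(e));
  }
  return converted.size;
}

const perDevice = countConversions(sampleEvents, (e) => e.deviceId);
const perUser = countConversions(sampleEvents, (e) => e.mpid);

console.log({ perDevice, perUser }); // { perDevice: 0, perUser: 1 }
```

Keyed by device, the purchase on the iOS app is never linked to the recommendation viewed on the web, so the conversion goes uncounted.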

Wrapping up

In Machine Learning, as in all data-centric tasks, the right infrastructure is key. When you use a CDP like mParticle to center your data infrastructure around a single, cross-platform customer record, your ML campaigns will be faster to set up, and more effective. By using a CDP, you can:

- Train your model with data about your customers, rather than just a set of devices and browser sessions.

- Use centralized quality controls to train your model on the best possible dataset, one that's consistent across all your data sources.

- Use scores and recommendations anywhere - not just on your website and apps, but in emails, push messages or even in your brick-and-mortar stores.

- Easily set up A/B tests and try out different models.

- Track the success of your ML experiences in your analytics platform.

To learn more about this use case, you can watch me demo it live with AWS on twitch.tv here.

To learn more about mParticle, and see how easy it is to collect and activate data with a CDP, you can try out our platform demo here.