How we reduced our S3 spend by 65% with block-level compression

As our customer base and platform offerings expanded over recent years, so did the cost of storing our clients’ data. We implemented a block-level compression solution that reduced our S3 spend by 65% without impacting the customer experience or client data.

mParticle turned 10 years old in July of this year. Since 2013, mParticle has evolved from an idea to an extensible platform supporting hundreds of enterprise brands across the world.

The volume of data the platform processes has also increased dramatically: mParticle now stores over 12 petabytes of client data. And as storage needs increase, so do overhead costs.

One of mParticle’s most widely adopted features is Audiences, which allows users to define rule-based customer segments. Audiences requires access to a rich set of historical customer data, which we store on Amazon S3. Audiences continually saves mParticle customers a lot of time and has supported some pretty cool marketing campaigns. But housing our customers’ long-term data was also ballooning our S3 bill, and these storage costs would continue to scale linearly as mParticle’s customer base grew.

So in 2022, we dedicated engineering resources to investigating how to optimize S3 storage costs, ultimately resulting in a block-level compression approach that generated a 65% decrease in our S3 spend.

Strategizing ways to decrease our data footprint

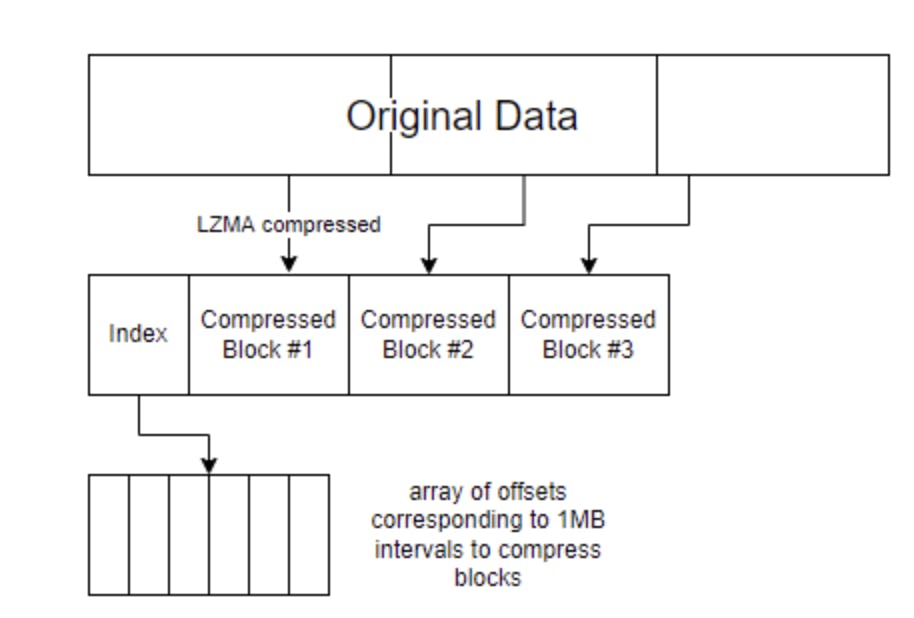

Before block-level compression, we compressed each event record we received from our customers individually and grouped the compressed records in a single file. These files, internally referred to as UserIndex files, each had a header with information and an index that allowed us to find individual records within the file for random-record access. This yielded a modest reduction in file size, but not enough to make a significant impact on our storage footprint at the scale of 12 petabytes of data.

We soon realized that UserIndex files were fairly similar and would compress well together. Instead of compressing each record and storing it in a UserIndex file as is, we could wrap UserIndex files and apply a compression strategy that would reduce our storage footprint without significantly affecting the random-record access use case. After some initial investigation, our team determined that compression at a block level could achieve incremental savings of more than 80% over our compression method at the time.

Incremental compression savings using block-level compression

| Block size | 33 KB (average record used today) | 256 KB | 512 KB | 1024 KB |
|---|---|---|---|---|
| Compressed size | 12 KB | 25 KB | 35 KB | 54 KB |
| Compression rate | 63.6% | 90.2% | 93.8% | 94.7% |
| Incremental compression | 0% | 73.1% | 83.0% | 85.4% |
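
The incremental compression figures appear to measure how many fewer bytes block compression stores compared with the per-record baseline. That reading is inferred from the table's numbers and is not a definition given in this article. Under that assumption, the short Python sketch below reproduces the row from the compression rates alone (the function and variable names are illustrative):

```python
# Assumed interpretation (not stated in the article): incremental compression
# is the fractional reduction in stored bytes relative to the per-record baseline.

BASELINE_RATE = 0.636  # compression rate for the 33 KB average record


def incremental_compression(block_rate: float, baseline_rate: float = BASELINE_RATE) -> float:
    """Fraction of the baseline's compressed bytes saved at a higher compression rate."""
    remaining_block = 1.0 - block_rate          # share of original bytes still stored
    remaining_baseline = 1.0 - baseline_rate    # same, for the per-record baseline
    return 1.0 - remaining_block / remaining_baseline


# Compression rates from the table, keyed by block size in KB.
table_rates = {256: 0.902, 512: 0.938, 1024: 0.947}

incremental = {
    size_kb: round(incremental_compression(rate) * 100, 1)
    for size_kb, rate in table_rates.items()
}

for size_kb, pct in incremental.items():
    print(f"{size_kb} KB blocks: {pct}% incremental compression")
```

The calculation starts from the published compression rates, not the rounded compressed sizes, because the sizes in the table are rounded to whole kilobytes.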

Developing the block compression library

Our team assembled a squad of four engineers to build and unit test the compression libraries, craft integration tests, perform validation, and build dashboards to visualize and monitor the storage gains in real time.

The first major task was to develop libraries to enable block compression on all services and batch jobs that stored data on S3. The solution would need to support two use cases: sequential full-file read and random record access within the file.

Compressing whole files would yield a high compression rate, but it would fail to support random record access, since the entire file would have to be downloaded and decompressed to read a single record. We therefore created a block compression solution that strikes a balance between compressing single records and compressing the whole file.
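
To illustrate the tradeoff, here is a minimal Python sketch that compresses the same set of records one at a time, in blocks, and as a single file. It assumes zlib as the compressor and uses synthetic JSON records; neither reflects mParticle's actual codec or data format, and the helper name is hypothetical.

```python
import json
import zlib

# Hypothetical, synthetic event records: same structure, different values.
records = [
    json.dumps(
        {"user_id": i, "event": "page_view", "platform": "ios", "ts": 1_660_000_000 + i}
    ).encode()
    for i in range(1000)
]


def compressed_size(records: list, records_per_block: int) -> int:
    """Total bytes after compressing the records in groups of `records_per_block`."""
    total = 0
    for start in range(0, len(records), records_per_block):
        block = b"".join(records[start:start + records_per_block])
        total += len(zlib.compress(block))
    return total


raw_size = sum(len(r) for r in records)
per_record = compressed_size(records, 1)              # each record compressed alone
per_block = compressed_size(records, 50)              # 50 records per block
whole_file = compressed_size(records, len(records))   # one block for everything

print(f"raw: {raw_size} B, per record: {per_record} B, "
      f"per block: {per_block} B, whole file: {whole_file} B")
```

On data like this, grouping records lets the compressor reuse the structure they share, so blocks should come out far smaller than records compressed individually. A reader still only has to decompress one block, not the whole file, to reach a single record.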

Once we established that block compression would reduce the size of the storage layer without adding much latency, the next question was whether it could be implemented without causing any disruptions at the application layer.

To allow application services to continue to make read requests to S3, we built an S3 client interface layer. The interface layer receives the client request, determines which block the requested data is stored on, retrieves this data from the appropriate block on the server, and returns it to the client. When the interface layer is asked to store a file in S3, it divides the file into blocks of the same size, compresses them, and creates the index, storing the blocks and the index in S3 instead of the original file. The interface layer allowed us to maintain performance of high-volume services like Audiences throughout the entire process of building and deploying block compression.
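
The read and write paths described above can be sketched roughly as follows. This is an illustration under stated assumptions, not mParticle's implementation: a Python dict stands in for an S3 bucket, zlib stands in for the compressor, each block is stored as its own object, and the class name, key layout, and index format are all hypothetical.

```python
import json
import zlib


class BlockCompressedStore:
    """Hypothetical interface layer; a dict stands in for an S3 bucket."""

    def __init__(self, block_size: int = 256 * 1024):
        self.block_size = block_size
        self.objects = {}  # object key -> bytes, standing in for S3 objects

    def put(self, key: str, data: bytes) -> None:
        """Split data into equal-sized blocks, compress each, store blocks plus an index."""
        block_count = 0
        for start in range(0, len(data), self.block_size):
            block = data[start:start + self.block_size]
            self.objects[f"{key}/block-{block_count}"] = zlib.compress(block)
            block_count += 1
        index = {"block_size": self.block_size, "length": len(data), "blocks": block_count}
        self.objects[f"{key}/index"] = json.dumps(index).encode()

    def _block(self, key: str, number: int) -> bytes:
        """Fetch and decompress a single block."""
        return zlib.decompress(self.objects[f"{key}/block-{number}"])

    def read(self, key: str, offset: int, length: int) -> bytes:
        """Random access: decompress only the blocks that cover the requested range."""
        index = json.loads(self.objects[f"{key}/index"])
        end = min(offset + length, index["length"])
        if offset >= end:
            return b""
        first = offset // index["block_size"]
        last = (end - 1) // index["block_size"]
        data = b"".join(self._block(key, n) for n in range(first, last + 1))
        skip = offset - first * index["block_size"]
        return data[skip:skip + (end - offset)]

    def read_all(self, key: str) -> bytes:
        """Sequential full-file read: decompress every block in order."""
        index = json.loads(self.objects[f"{key}/index"])
        return b"".join(self._block(key, n) for n in range(index["blocks"]))


store = BlockCompressedStore(block_size=16)  # tiny blocks to keep the example readable
payload = b"record-one|record-two|record-three|record-four"
store.put("userindex/example", payload)
record = store.read("userindex/example", offset=11, length=10)
print(record)
```

Because every block covers the same number of uncompressed bytes, the block holding a given offset can be found with integer division, and callers keep addressing data by offset and length as they would against the uncompressed file.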

Project execution and rollout

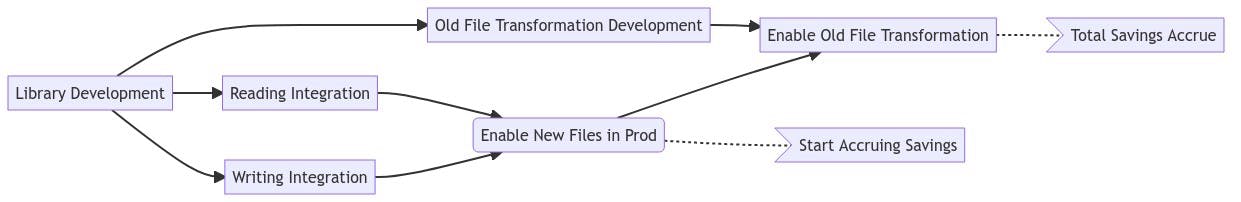

Tasked with writing, testing, and deploying the compression strategy in just six weeks, the team designed the following project flow.

Development began in late August 2022 and wrapped up in October 2022. Thanks in large part to extensive and thorough pre-project planning, the squad assigned to this initiative was able to make fast and consistent progress.

“The team was able to come together, stay in sync, and work very cohesively on this project,” says Technical Project Manager Chelsea Shoup, who oversaw and coordinated this initiative. “We had multiple meetings a day to check in on progress, and we all just swarmed it. There were no silos at all; everyone was working together very effectively. I think knowing that we only had six weeks helped keep everyone motivated and moving forward.”

“The group felt empowered to solve this problem in whatever way we deemed appropriate,” noted Staff Cloud Operations Engineer Dave Kaufman. “We had the right people, the right level of expertise, and we all executed well with a solid understanding of the problem we were trying to solve.”

Just one month after launch, we saw a 65% reduction in our S3 costs.

On the project’s post-mortem call, the word “uneventful” was used on several occasions, not to describe the work that went into planning, executing, testing, and deploying, but as a reference to the end-user experience while the project was in flight. mParticle customers were able to leverage all product functionality despite the work taking place “under the hood.”